AI Agent Basics: Let’s Think Step By Step

An introduction to the concepts behind AgentGPT, BabyAGI, LangChain, and the LLM-powered agent revolution.

The story so far: It’s rare that you get to watch an entirely new software paradigm develop and spread in real-time, but this is exactly what’s happening with the proliferation of large language models (LLMs) and the tools being built with them, for them, and by them.

Actually, there are multiple new software paradigms cropping up, and everyone knows this, and everyone is flipping out. Just losing their minds. VCs out here throwing money around like it’s 2021 again. Programmers raising huge rounds on a meme and a prototype. Threadbois making threads. Thinkfluencers ‘fluencing thinking. Contrarians warning that it’s all gonna end in tears.

With this newsletter post, I am asking everyone to please calm down. There is no need for all of this hootin’ and hollerin’.

All the crazy stuff people are predicting will indeed happen, but in like a few weeks or maybe even months, which is basically an eternity in Twitter years. So right now, you can chill out because “GPT agents” are just in their Dotcom mania phase. The real, permanent boom that will change everything — the rise of Amazon and Netflix, social media, the mobile internet, and all that — is multiple whole entire weeks away.

The idea behind the LLM-powered agent is simple: I type a text prompt into a box describing some end goal, using as much detail as I can supply, and then an AI takes over and makes it happen with as little further input from me as possible.

Think of a text-to-image generator like DALL-E 2 or Stable Diffusion, but instead of generating an image from a prompt, the agent generates an entirely new thing in the world — a pizza delivery, an online business, a fully booked vacation, a marketing campaign complete with creative assets and ad buys, etc. Sounds pretty ✨fantastic✨, right?

🤌 Many people are saying it is ✨fantastic✨. Here’s a brief overview of the hype:

On a recent podcast episode, a host shared a story about a guy who gave an LLM-powered agent a budget, internet access, and a mandate to plan a weekend party at a restaurant with a specific headcount, dietary requirements, a budget, and other parameters. The agent did such a great job that the guy planned to book the venue. (Or, at least, I think this is how it all went down, based on my recollection of the host’s recollection of the guy’s recollection of the whole incident.)

I read on Twitter about a guy who used an agent to order a pizza.

Many people are asking agents to create online businesses for them and then to run the business.

An AI builder with a huge newsletter following claims many of us will be working for AI agents in the next few years.

It’s thought that we can probably use some agent-type architecture to build an AGI with existing LLM tech.

Agents can shield you from criminal liability, because if the agent spawns a sub-agent that gets caught breaking the law, then it goes to prison and not the supervisor agent or the human who supplied the prompt. If cops want to nail the human on RICO charges, they have to flip all the sub-agents up the chain until they get to the user.

Some guy made a bot called BabyAGI using this agent concept, and it was like 110 lines of code, and everybody immediately lost it and threw money at him to make it into way more lines of code, and then a few days later OpenAI’s former head of product joined the resulting real company.

I left out links in the above list because I made one of the items up as a joke, and I want to leave it as an exercise for the reader to figure out which one.

🙌 So yeah, people are excited about the agents. But the current agent mania is a little bit like cryptomania — there’s definitely something earthshakingly important there, but it’s a long way (in AI time) from being truly usable in production, and in the meantime, everyone is grabbing at low-hanging fruit in what amounts to publicity stunts that are attracting tons of money. Also, some tiny handful of these publicity stunts will be worth billions and the rest will be worth zero.

Ultracompressed hype cycle aside, agents really are coming and they really do matter, because at the heart of the agent concept is a brand new capability that software has only very recently acquired: the ability to make a plan.

Core agent concepts

The AI literature features different definitions of the term, but for the most part, an agent is something with the following qualities:

Has at least one goal, but usually more than one.

Can observe the present state of its environment.

Can use its observation of its environment to formulate a plan of action that will transform that environment from the present state to a future one where the agent’s goal is achieved.

Can act on its environment in order to carry out its plan, ideally also adjusting the plan as it goes based on continued observations of the changing environment.

Throughout most of the history of software, programmable machines augmented with sensors, data feeds, motors, actuators, and access to external APIs have been able to do every one of the above items except #3.

☑️ The real unlock that makes agents an entirely new software paradigm lies in the modern LLM’s ability to take in a goal, along with a set of facts and constraints, and then create a step-by-step plan for achieving that goal.

Before LLMs, the programmer had to make the plan — a computer program is really just a step-by-step set of actions the machine will need to take to accomplish a goal. But in the LLM era, machines’ newly acquired ability to make their own plans has everyone in a frenzy of either fear or greed.

To dive a little deeper, take a look at the following text:

Feature: Overdue tasks

Let users know when tasks are overdue

Rule: Users are notified about overdue tasks on first use of the day

Background:

Given I have overdue tasks

Example: First use of the day

Given I last used the app yesterday

When I use the app

Then I am notified about overdue tasks

Example: Already used today

Given I last used the app earlier today

When I use the app

Then I am not notified about overdue tasks

🥒 Many programmers will recognize the above as Cucumber tests, and indeed I copied them from the project’s docs. These are automated tests written in a human-readable, English-like language called Gherkin.

In the olden days of, oh, a month ago, a software project manager and various stakeholders would sit around and hash out a document like the above before handing it off to the engineers. This document, which may or may not be written in a formal specification language like Gherkin, is meant to describe all the ways a piece of software (or, more usually, a single feature of a larger piece of software) might behave.

👉 Overall, then, the process of getting machines to accomplish a goal went something like this:

The stakeholder describes a goal to the software product manager.

The product manager and the stakeholder survey the current state of the world — existing software, knowledge of user habits, design patterns, etc. — and formulate a step-by-step plan for how the software should achieve the goal. This plan would fully lay out what the machine should do in both success scenarios (i.e. “the happy path”) and failure scenarios (i.e. “the sad path”)

The software engineers take the plan and write code to implement the plan exactly as specified so that it achieves the stakeholder’s original goal.

We’ll refer to the above as the legacy paradigm for using software to accomplish goals.

In contrast, the new agent paradigm works as follows:

The stakeholder describes a goal to the agent.

The agent searches the internet, queries the stakeholder, and/or draws on its training to understand the state of the world and formulate a step-by-step plan for achieving the goal.

The agent takes a set of actions to achieve the stakeholder’s original goal.

Compare the first list to the second and think about how many fewer humans are involved in this newer way of making computers do things. Yeah, there’s about to be either a lot more software out there in the world, or way fewer programmers, or both.

🏭 But before we get too excited, we should realize that this new, agent-powered way of doing things has a pretty serious tradeoff: it uses a few orders of magnitude more computer power than the first method to accomplish the same set of actions.

Every time the agent uses an LLM to run an inference, it can cost up to a few cents, which means that carrying out a particular sequence of automated actions that would’ve been essentially free under the old paradigm can cost a few dollars under the new paradigm.

For much more on this issue of costs and risks of the agent paradigm vs. the legacy paradigm, see “Appendix A” at the end of this article.

How LLMs make plans

When we trained the first LLMs, we didn’t know these bundles of math and code would have the ability to make their own detailed plans for achieving a given goal.

‼️ Our language models were trained to complete sentences by filling in a missing word as a way to get them to output natural-sounding language. But their subsequent ability to take in an objective described in natural language and generate a logical, realistic, step-by-step sequence of actions (also described in natural language) for achieving that objective was a surprise.

The ReAct paper from late 2022 (lol so amazing that the paper that really kicked off the agent craze is not even a year old as of this writing) does a good job of laying out the history of the discovery that when LLMs get to be big enough (as measured by parameter count) they gain the ability to reason in a step-by-step fashion.

🤔 This reasoning ability manifests in two ways:

Chain-of-thought reasoning, where the model can be prompted to walk through the logic behind some conclusion or output. (This is the famous “let’s think step-by-step” prompt trick.)

Action planning reasoning, where the model can be prompted to come up with a series of steps that will lead to some future goal.

🤝 The ReAct paper combines both of these types of reasoning into a single prompt in order to coax out of the model conclusions and action plans that higher quality and more grounded in fact (instead of hallucination).

Sophisticated users of LLMs and regular readers of this newsletter will want to understand what all this stuff I’m talking about actually looks like in practice. What does it mean to “combines both of these types of reasoning into a single prompt” so that the LLM is able to output action sequences? What specifically are we doing here? Let’s find out.

Chain of thought reasoning

🔎 To recap a bit from my explainer on ChatGPT, an LLM takes in an input sequence of symbols (the prompt) and uses it in combination with a very carefully sculpted multidimensional blob of probabilities to locate a related output sequence within the space of all possible symbol sequences.

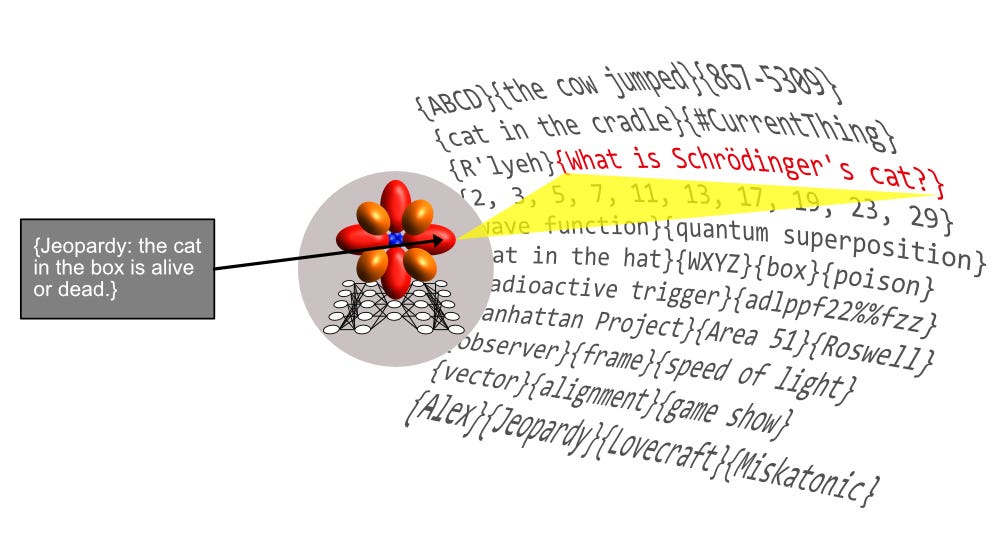

In the image above, my prompt in that gray box is a collection of symbols (noted by the fact that it’s enclosed in curly brackets), of the form: Jeopardy: the cat in the box is alive or dead. The LLM takes that input sequence, and using what it “knows” about the world from its training — knowledge that’s constituted by a set of probabilities that capture relationships between words and concepts — it “figures out” that the input string is an answer from the game show Jeopardy and that the most appropriate output is probably a question about Schrödinger’s cat.

Important: That “Jeopardy:” prefix clued it into the fact it’s looking at a Jeopardy answer.

So what if I fed my LLM a more elaborate prompt like this:

Question: What is the distance from Memphis to Chattanooga?

Answer: The total straight line flight distance from Memphis, TN to Chattanooga, TN is 269 miles.

Question: What is the distance from Paris to Reno?How should the model complete the above prompt?

First, let’s review what we might expect the model to infer from that initial question-and-answer pair about the distance from Memphis to Chattanooga.

The mention of Chattanooga should tip it off that I’m asking about the Memphis that’s in Tennessee, USA, not the one in Egypt.

My example answer shows that I want to know the straight-line distance, not the driving distance.

My answer also indicates I want the distance in miles, not kilometers.

Given these facts, it makes sense that the model will try to complete the prompt by answering that final, unanswered question with a comparison of the straight-line distance between two American cities named “Paris” and “Reno” in miles.

But there are many Parises in the US and many Renos. There’s a Paris in TN, so the model might reasonably start there, but TN has no town named Reno. What to do?

Let’s tweak the prompt a little bit to clear this up:

Question: What is the distance from Memphis to Chattanooga?

Thought: Chattanooga is in TN, and there's a city named Memphis nearby. So we're looking at nearby cities in the same state.

Answer: The total straight line flight distance from Memphis, TN to Chattanooga, TN is 269 miles.

Question: What is the distance from Paris to Reno?I stuck an example of some reasoning into the prompt, prefixed with Thought:, so the model should be better equipped to figure out that I want the distance between a Paris and a Reno that are in the same state.

It turns out that Texas has a Paris and a Reno right next to each other, so assuming the model has been trained to have a detailed knowledge of maps and distances between major landmarks, a reasonable completion might be:

Answer: The total straight line flight distance from Paris, TX to Reno, TX is 6 miles.⭐️ The point: In the same way that you can add a bunch of words like, “trending on Artstation” and “Greg Rutkowski” to a Stable Diffusion prompt to steer the image generation model toward a particular region of latent space, you can also add question/answer pairs, thoughts, observations, and other labeled information to a text-to-text prompt in order to steer an LLM toward a region of latent space that has the concepts and reasoning you’re looking for inside it.

(Again, we learned all this by poking at these models and experimenting. Pretty wild, right?)

👍 Important detail: If you read my CHAT stack explainer, you’ll recognize that all the material prior to the final question in the two example prompts above is a specific type of prompt ingredient with a specific name: context. The main part of the prompt is, “Question: What is the distance from Paris to Reno,” and all the stuff preceding it is context that we’re giving the model so it can make better sense of the prompt.

Action planning

In the previous section, we built an elaborate prompt that contains the following types of context:

Question — an example of a question we might ask.

Thought — an example of the kind of reasoning we’d use to answer the preceding question.

Answer — an example of a desirable answer to the preceding question that follows from the reasoning laid out in the thought.

This is all fine and good, but what if an agent needs to go out and do things in the world — what if it needs to hook into other types of software (or even hardware) to gather fresh knowledge and interact with its environment?

🤷♂️ What if the LLM, in response to our most recent and most elaborate prompt, doesn’t actually have the distances between US towns and cities anywhere in its training data, so it gave us the following answer?

Answer: I'm sorry, but as a friendly Chatbot that hasn't learned much US geography knowledge and doesn't have access to the interent, I cannot tell you the straight line distance in miles from Paris, TX to Reno, TX.🗺️ If we got that response, we might go to Google Maps and finish off the task to get our answer. But it would be better if the computer could make a call to the Google Maps API for us, right?

Imagine a computer program that carries out the following steps:

Feed the long prompt with the question/thought/answer material into the LLM.

Check the LLM’s response for the words, “I’m sorry… I cannot tell you the straight line distance in miles.”

If it has the “I’m sorry…” words, then check Google Maps to get the distance between the two cities it mentioned in that sentence (i.e., 6 miles), add the words “

Observation: My research indicates there's a pair of cities in Texas named Paris and Reno, and they're separated by only 6 miles.” to the context part of the prompt somewhere, and put this new, expanded prompt into the LLM.

So in that last step, we’re re-running the original prompt, but in an expanded form with more context that looks as follows:

Question: What is the distance from Memphis to Chattanooga?

Thought: Chattanooga is in TN, and there's a city named Memphis nearby. So we're looking at nearby cities in the same state.

Answer: The total straight line flight distance from Memphis, TN to Chattanooga, TN is 269 miles.

Observation: My research indicates there's a pair of cities in Texas named Paris and Reno, and they're separated by only 6 miles.

Question: What is the distance from Paris to Reno?This prompt now has enough info for the LLM to spit out the desired answer, which (again) is:

Answer: The total straight line flight distance from Paris, TX to Reno, TX is 6 miles.The more abstract pattern we’re using now is:

Submit the prompt and relevant context to the LLM.

Check the LLM’s response for some indication that it needs to take some action in order to complete the prompt.

Take the action on behalf of the LLM.

Insert the results of the action into a copy of the original prompt as a bit of

Observationcontext. (Note that we also inject the actualActionline, just so the LLM has the full context for what has been done and where theObservationcame from. I didn’t represent this in the above prompt text, though, but I’ll add it below in a moment.)Re-submit the prompt, which is now richer and more informative because it has the results of an action in it.

💪 We can now fill out our list of context types so that it has enough ingredients that if we manage to iteratively assemble all of them into one super-long, elaborate prompt, we can get most types of answers we might look for:

Question — an example of a question we might ask.

Thought — an example of the kind of reasoning we’d use to answer the preceding question.

Answer — an example of a desirable answer to the preceding question that follows from the reasoning laid out in the thought.

Action — a search query, Google Maps query, travel reservation website click, or any other type of thing we might want to do in order to get some results that we can use to fill out an extended prompt with enough context to elicit a complete, correct answer from the model.

Observation — some bit of additional context we gained from performing an action on behalf of the model.

⚙️ To give some pseudocode for those who can read such things, the main program loop for an agent that answers the Paris-to-Reno distance question might look something like this:

prompt = "Question: What is the distance from Paris to Reno?"

context = "Question: What is the disance from Memphis to Chattanooga?\nThought: Chattanooga is in TN..."

full_prompt = context + "\n" + prompt

llm_response = submit_prompt_to_llm(full_prompt)

while is_action?(llm_response) do

observation = perform_action(llm_response)

full_prompt = full_prompt + llm_response + observation

llm_response = submit_prompt_to_llm(full_prompt)

end

print(response)You can see that we’re getting an initial response from the LLM. Then we’re checking to see if that response contains the string “Action:” because if it does then we need to perform an action (which we do with the perform_action() function, which can read an “Action:” string and execute the correct code).

It may be the case that the agent keeps giving us back Action: responses because it needs more info, so we enter a while loop that checks each response to make sure it’s not an Action: response (using an is_action?() function), and if the response is not an action then we assume we’re done and we exit the loop.

We now can execute the pseudocode above to walk through the full Google Maps example, with the initial prompt, the initial response, and the modified ReAct prompt that we re-submit to the LLM to get the final answer.

Example: The first prompt-response pair

Prompt #1 with context:

Question: What is the distance from Memphis to Chattanooga?

Thought: Chattanooga is in TN, and there's a city named Memphis nearby. So we're looking at nearby cities in the same state.

Action: Check Google Maps for the straight-line distance from Memphis, TN to Chattanooga, TN.

Observation: Google Maps says Chattanooga, TN and Mephis, TN are separated by 269 miles.

Answer: The total straight line flight distance from Memphis, TN to Chattanooga, TN is 269 miles.

Question: What is the distance from Paris to Reno?LLM response #1:

Action: Check Google Maps for the straight-line distance from Paris, TX to Reno, TX.You can see from this pair that the LLM looked at the first prompt and understood that instead of throwing its hands up and saying it doesn’t have internet access, it should actually produce an Action: line on the pattern of the example in the prompt. So that’s exactly what it did.

Example continued: The second prompt-response pair

Our agent program got the above “Action:...” text back from the LLM, and it used a bit of computer code to turn that language into a Google Maps API call that asks for the distance from Paris, TX to Reno, TX.

The agent then used another bit of code to turn the results of that API call into an English-language “Observation:...” line that contains some facts about the distance between the two towns.

Finally, the agent takes that Action and Observation pair and appends them to the original prompt as follows.

Prompt #2 with context:

Question: What is the distance from Memphis to Chattanooga?

Thought: Chattanooga is in TN, and there's a city named Memphis nearby. So we're looking at nearby cities in the same state.

Action: Check Google Maps for the straight-line distance from Memphis, TN to Chattanooga, TN.

Observation: Google Maps says Chattanooga, TN and Mephis, TN are separated by 269 miles.

Answer: The total straight line flight distance from Memphis, TN to Chattanooga, TN is 269 miles.

Question: What is the distance from Paris to Reno?

Action: Check Google Maps for the straight-line distance from Paris, TX to Reno, TX.

Observation: According to Google Maps, the straight-line distance from Paris, TX to Reno, TX is 6 miles.

LLM response #2:

With the full context from the new and improved prompt, the LLM can answer the original question properly:

Answer: The total straight line flight distance from Paris, TX to Reno, TX is 6 miles. Nerd stuff: TDD programmers already know how to do all this

🚲 Just for fun, let’s go back and revisit the Gherkin sample from much earlier in this post. You remember it, right? It’s the natural-language-looking text that’s both human-readable and machine-readable:

Example: First use of the day

Given I last used the app yesterday

When I use the app

Then I am notified about overdue tasksLet’s rewrite it:

Example: First use of the day

Thought: I last used the app yesterday

Action: I use the app

Observation: I am notified about overdue tasksIf you’re a practitioner of test-driven development (TDD), then this action and observation stuff is old hat. Even if you didn’t use Cucumber and were just writing unit tests, you’re still used to thinking in exactly these terms.

So I and my fellow TDD practitioners (represent!) are already way ahead of the game, because we’re already accustomed to thinking primarily within the agent paradigm. We have whole libraries for specifying agentic behavior and for backing those descriptions with some code that makes them work.

Clarification: I don’t want to give non-devs the impression that everyone uses Cucumber — this is far from the truth. In fact, in the ruby community, it fell out of fashion years ago in favor of just writing more (and more elaborate) integration tests in rspec. But I think it could be in for a revival in the agent era.

If you’re not TDD-pilled, it’s worth learning a bit about how teams program with Cucumber, because you’ll start to see how well all of this seems to fit with the agent framework. Here’s a brief overview, which you can skip if you want, but I personally am convinced there’s something to this.

Cucumber crash course, agent edition

Given that a Gherkin acceptance test file has a specific format and uses specific keywords in a specific way, software engineers can actually feed that file directly into a test suite that will use pattern-matching and some custom code (step definitions) to check all the file’s conditions (“Given X…”), actions (“When X happens…”), and outcomes (“Then Y happens…”).

A software engineer, then, can a Gherkin file from the product manager and then do the following two steps:

1️⃣ Write a set of step definition files that translate the above, human-readable lines into executable code blocks that can run in order to test the main program.

For example, the bit of ruby code to implement part of the example might look something like this:

Given("I last used the app {time}") do |time|

@time = time

end

When("I use the app") do

TaskApp.start()

@notifications = TaskApp.get_notifications_since(@time)

# It's been like three years since I wrote any ruby

# so just go with it and don't judge.

end

Then("I am notified about overdue tasks") do

expect(@notifications).not_to be_empty

end

2️⃣ Write the actual application code that enables the steps in the step definitions file to execute without error.

So for the above step definition to execute without any failures, the programmers need to correctly implement two functions in the main application code:

TaskApp.start()TaskApp.get_notifications_since()

🤔 Y’know, step definitions look suspiciously like “tools” and parsers from some of the new agent frameworks I’ve been looking at. This being the case, I hypothesize that when we have LLMs that can perform the following translations, it’s all over:

Goal => Gherkin acceptance test file

Gherkin => step definition file

Step definition => application code

There’s a lot of the above type code out there in repos that we could use to train LLMs, so I don’t think an LLM that can do all of the above steps is that big of a leap from where we are now.

Furthermore, it strikes me that the regnant RLHF pattern, where humans provide input/output example pairs to tune LLMs toward specific types of output, could be applied to each of the three kinds of transformation listed here so that an LLM gets really good at each kind.

🚀 This is doable and we should do it.

Tasks and priorities

If you’ve made it this far, then congratulations: you have observed the future of software in action. The following very expensive loop is going run again and again and will power the next trillion dollars of software value:

llm_response = submit_prompt_to_llm(full_prompt)

while is_action?(llm_response) do

observation = perform_action(llm_response)

full_prompt = full_prompt + llm_response + observation

llm_response = submit_prompt_to_llm(full_prompt)

endBut there are some missing pieces in this very basic but powerful loop.

The first, and most obvious shortcoming of our agent loop is it’s all just actions. But a bunch of actions is not an actual plan. Normally, a plan is an ordered sequence of tasks, which are individually composed of sequences of actions.

Consider the following goal and plan, where the plan has been broken down into tasks and subtasks:

Goal: Pay my 2023 taxes

Plan:

Task: Calculate my taxes for 2023

Task: Collect my tax docs

Task: Total up my earnings

Task: Total up my deductions

Task: Calculate my AGI

Task: Caculate my amount due

Task: Pay my 2023 taxes

Task: Check my bank account to ensure I have enough to pay my amount due

Task: Use an online tax service to pay my amount due↔️ You can see that for an agent to be truly useful, it has to have a way of making two transformations:

Goal => tasks

Task => actions

Luckily, LLMs can already do from a goal to a list of tasks. I’m confident that an even more detailed form of the plan above would not be difficult for GPT-4 to produce when properly prompted.

And thanks to the ReAct pattern, LLMs can also go from a task description to a sequence of actions.

🔧 But right now, both of these transformations require a fair bit of interactive prompt tinkering to be really successful. The big questions are:

Can some agent software, with no supervision, help a naive user generate just the right prompt to get a high-quality, implementable plan out of GPT-4?

Can the agent software automatically decompose each task into a set of productive actions that make real progress toward that task’s completion?

These things are TBD right now because they depend heavily on the design of the agent — how well does it recognize and handle sad paths and unproductive plans? — and the prompting skills that are baked into it.

We’re also still learning the best ways to reliably prompt the models to make these kinds of detailed plans, but already it seems clear that as they improve and as token windows get bigger, this step of converting some very ambitious goals to all the way to a detailed sequence of productive, relevant actions seems within reach.

⛓️ The taxes example highlights two other things we’re missing from our agent picture so far: dependencies and priorities. A few of the tasks above can be completed in parallel with other tasks. But many of the tasks depend on the output of other tasks, so they have to be done in a certain order — in software terms, we’d say the actions have to be chained together.

It will be up to some part of the agent — probably the LLM, but software can help a lot with this — to find dependencies among the tasks and build chains out of them. There will probably be popular task chains that get cached and re-used, with only some of the parameters being swapped out for specific runs.

The agent will also have to analyze the tasks and chains well enough to set priorities so that more important tasks (tasks that may take a long time, or tasks with many dependencies) execute earlier.

👯♀️ To return again to our discussion of the legacy software paradigm, with its product managers, feature specifications, and programmers, this business of sequencing and prioritizing work is a critical thing that capable PMs and teams are able to do. I think LLMs are probably a very long way from really being able to do this sort of work. (Like, a couple of quarters, maybe? But really, who knows.)

For tasks and task chains that can be worked on in parallel, the popular agent libraries already spawn sub-agents to do specific types of work. This parallelism is powerful but expensive and will have to be carefully managed to keep inference costs from spiraling or tasks from failing because inference budget limits are hit.

🥊 Finally, it’s also the case that, per Mike Tyson, “everyone has a plan until they get punched in the mouth.” So an agent will have to adapt its plan on-the-fly, orchestrating the mix of tasks, priorities, and sub-agents to fit new observations and changing conditions. There are many hints that this type of flexibility is possible within the current ML paradigm, but we’re going to have to work at figuring out how to unlock it and exploit it, exactly the way we had to work at discovering the power of the ReAct pattern.

Up next: more resources, projects, & future directions

This post is already almost twice as long as what I normally write, so I’m going to cut it off here. There’s a ton more to say about agents, though, but that’ll have to wait for another installment.

With what I’ve written above, most programmers should now be equipped to jump into the “Resources” section below to understand what they’re looking at. They should also be able to get started working with some of these tools or (even better) building their own.

⏩ In the next installment, I’d like to look in more detail at some of the specific projects. I haven’t even gotten into how any of the agent projects actually work, using vector stores as memory and talking to external APIs. I’ve mostly focused on the ReAct pattern and some higher-level considerations, but there are many prototype implementations that are worth looking at in more detail.

Further reading

How does GPT Obtain its Ability? Tracing Emergent Abilities of Language Models to their Sources

A simple Python implementation of the ReAct pattern for LLMs

The Anatomy of Autonomy: Why Agents are the next AI Killer App after ChatGPT

https://twitter.com/yoheinakajima/status/1642881722495954945

Appendix A: Agents are a lot like cloud computing

Let’s look at this issue of the cost of agents in a bit more detail, because it’s very important for thinking about the future of this software paradigm. This section will primarily be of interest to analysts, managers, and software types, so many of you will want to skip it.

Consider a hypothetical piece of very simple software implemented twice: once by a human using the legacy paradigm, and once by means of a simple text prompt to an LLM-powered agent. Here are some example execution runs under the two paradigms:

Legacy paradigm: 10 API calls to a mix of free and metered APIs = total $0.00001 of marginal cost for all ten calls.

Agent paradigm: 10 API calls to a mix of free and metered APIs + 20 API calls to OpenAI or some other LLM provider = total $1.20 of marginal cost for all ten calls.

I just made these cost numbers up, but they’re kind of ball-parkish and serve well enough to illustrate the point that the agent paradigm is massively more expensive per execution run.

But note that I used the term “marginal cost” in both examples above. In the legacy software paradigm, there’s a large up-front investment of money in terms of programmer compensation, and that sunk cost is amortized over billions or trillions of execution runs in the life of the software with each of those runs incurring a bit of marginal cost in electricity and fees.

Contrast this to the agent paradigm, where the sunk cost of initial development is dramatically lower —it could even be zero if there’s no development required and a generalized agent is able to accomplish the task based purely on a prompt. But every time a user uses the agent to carry out the task, the cost is material.

Legacy software also has significant ongoing maintenance costs that have to be factored into the equation. The agent paradigm’s maintenance costs are also potentially zero for the user.

The agent paradigm, then, is a form of metered execution very much like the cloud computing paradigm. Indeed, the tradeoffs of agent vs. legacy are almost identical to the well-known tradeoffs involved in cloud vs. on-premises.

With both the legacy paradigm and on-premises hosting, the stakeholder is committing to a large sunk cost and ongoing maintenance costs because she has modeled the ROI on that investment and is predicting a positive return. So the stakeholder is shouldering the risk of owning the resource in exchange for the reward of capturing all the ROI she’s going to get because her ongoing marginal cost of using the resource is so low.

With both the agent and cloud paradigms, the stakeholder is minimizing her risk by committing to zero (or near-zero) up-front sunk costs and zero maintenance costs. Instead, she’s taking on huge marginal costs of the kind that only make sense if she’s not going to be using the compute resource enough to make the legacy paradigm’s cost structure worth it.

So agent and cloud are alike in how they let users manage risk, and thinking of them in this way gives us some guidance on how the agent revolution will play out. I think we can surmise a few things from this:

The legacy paradigm will continue to find extensive use, and it’s doubtful that the agent paradigm will replace it to any meaningful degree. Rather, agents will augment legacy software in some cases, and in others will enable software to eat parts of the world that were previously off-limits to it because of risk/reward factors.

Agents will initially find more use in very niche scenarios where the user can shoulder most of the inference cost on self-owned hardware (a phone or laptop), or where the user really doesn’t want to engage a human for whatever reason.

The agent paradigm is a new form of linkage between human labor costs and ML inference costs. This means as inference costs get lower (and as the agent paradigm matures), more types of cheap human labor will get driven down in value. (See my article on ML as a deflation vector. Agents give more types of labor direct exposure to Moore’s Law’s deflationary effects.)

The type of labor exemplified by Mechanical Turk, Upwork, and other types of services where inexpensive tasks are farmed out to off-shore human assistance, is at most immediate risk from agents.