AI Content Generation, Part 2: Tasks And Models

A close look at the tasks and models behind the marketplace of AI-powered content apps.

This is Part 2 of an ongoing series on AI content generation: how and why it works, and how to use the new tools that are coming out. Part 1 lays a conceptual foundation for everything discussed in the present article, so be sure to read it if you missed it. Part 3 is a deep dive into Stable Diffusion. Part 4 is a look at what’s next for AI content generation. Subscribe now so you don’t miss any future installments!

If you’re building in any of the spaces I cover in this Substack, then you can use this form to tell me about it and I may be able to help you get connected with funding.

Despite how fast the machine learning space moves, you can navigate the rapidly shifting terrain if you know what kinds of problems ML researchers are racing to solve.

There’s a constellation of discrete tasks that researchers are trying to apply ML to, tasks where the success conditions are pretty well understood and where progress can be easily measured and communicated to potential users.

Large models are developed, refined, and trained to accomplish one or more of these tasks. They’re then released for public use, either in user-facing product form (Google), as an API (OpenAI), or as open-source software (Stability AI).

B2B and B2C software developers build user-facing apps on top of those models by calling APIs that give them access to the tasks the models can perform.

Not only are there many different markets for ML, but there are many different kinds of markets — and all of these markets will be powered by a much smaller number of discrete capabilities that ML researchers will enable. So if you understand the capabilities and the models, you’ll have a much easier time keeping up with the constant flow of new apps.

The bulk of this article, then, is an overview of ML tasks and models that’s aimed at orienting you to the space and giving you something to refer back to as you explore further on your own. I’ll start by breaking down some of the tasks in that figure above, then we’ll look at the model layer, and finally, I’ll end with pointers to resources for keeping up with the app churn.

Contents:

Machine learning tasks

Training vs. inputs

An aside about cognition

Interpreting images

Transforming and generating images

Interpreting text

Transforming and generating text

Getting to know the models

GPT-3

GPT-J

DALL-E 2

Stable Diffusion

Machine learning tasks

The sections below focus on machine learning tasks that deal with text and images. Once you’ve got these under your belt, it’s pretty easy to understand these same tasks when they’re translated to audio and video. The names and functions are recognizable across domains, so there’s not a lot of need to repeat the material.

There are some fundamental types of ML tasks we’ll encounter in both images and texts. In the previous article, I put these tasks into three main categories: generation, classification, and transformation. We can expand that task list, though, to make it a bit more granular and descriptive:

1. Classifying a whole thing, e.g., “this sentence expresses a negative feeling,” or “this is a photograph of a natural environment.”

2. Classifying part of a thing, e.g., “this word in this sentence is a noun,” or “this region of pixels contains a tree.”

3. Comparing two things to measure sameness/difference, e.g., “this caption does/doesn’t fit with this image.”

4. Extracting part of a thing, e.g., “here’s a sentence from this document that’s an answer to a question,” or “here are all the faces in this photograph.”

5. Transforming a thing, e.g., “here’s a version of your input text at a lower grade level,” or “here’s a version of your input photograph of Robert De Niro rendered as a My Little Pony character.”

6. Generating a thing, e.g., “here’s the text an informed writer might produce in response to your prompt,” or “here’s the image a talented artist might paint in response to your prompt.”

7. Predicting a thing, e.g., “here’s the likely next word in this incomplete sentence you just typed,” or “here are the likely pixels that might fill in the gaps in your input image.”

There’s plenty of slippage between the above categories, so it’s best not to get too hung up on putting different tasks into the “correct” one. For instance, text and image generation is actually a flavor of prediction; also, extraction depends on classification, and the distinction between “whole” and “part” can change with context. But generally speaking, the above is a good list of the kinds of things ML does.
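
To make the “generation is a flavor of prediction” point concrete, here’s a minimal sketch in Python. It is not how a large language model works internally; it swaps in a simple bigram word counter, and the function names and the tiny corpus are made up for illustration. The point is only the shape of the loop: predict one word, append it, and predict again.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count which word follows which in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Prediction: return the most frequent follower of `word`, or None."""
    candidates = follows.get(word)
    if not candidates:
        return None
    # Highest count wins; ties are broken alphabetically so results are repeatable.
    return min(candidates, key=lambda w: (-candidates[w], w))

def generate(follows, prompt, max_words=5):
    """Generation: call predict_next repeatedly, feeding each output back in."""
    words = prompt.lower().split()
    for _ in range(max_words):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

# Toy corpus, invented for this example.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
print(predict_next(model, "the"))
print(generate(model, "the", max_words=3))
```

A real model predicts from far more context than one preceding word, but the generate-by-repeated-prediction loop has the same outline.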

We can also split the above list into two even more general categories:

Numbers 1-4 are about interpreting a piece of input to identify, label, classify, and separate things that are the same from things that are different.

Numbers 5-7 are about generating a new thing that shares desired similarities with the input thing.

It’s weird the way that you can kind of break down ML tasks into “reading vs. writing,” isn’t it? (Or, at least it’s weird to me.) And just like with reading and writing, the different task categories depend on one another in interesting ways.

Another point worth pondering (especially if you’re an AGI skeptic) is that for a human to demonstrate an understanding of some phenomenon, topic, text, art, vocational skill, or other object of study, we’d expect them to be able to do some amount of all of the above types of tasks in relation to that object. In other words, in the course of being formally evaluated as a student or apprentice in some area, you’re going to need to do a mix of all seven of the above before you’re judged as having mastered the material.

Computers are getting very good at all of the above task types in isolation and in specific contexts. At some point soon, some system somewhere will be very good at using all seven of these task types together in general and novel contexts. Anyway, more on this topic in the “What’s Next?” post I’m working on.

Training vs. inputs

Some types of tasks you’ll encounter in ML will have more than one input. For instance, if you’re comparing and/or contrasting two things, you have to supply both of those things in order for the model to do its work. Or, you may have to supply some additional context along with your original input, e.g., for a question answering model, you’ll supply a question alongside a block of text from which the model can extract the relevant answer.
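
Here’s a rough sketch of what “two inputs” looks like for question answering. It is a stand-in, not a real QA model: instead of a trained network, it just picks the sentence in the context that shares the most words with the question. The `answer` and `tokenize` names and the sample context are my own inventions for illustration.

```python
import re

def tokenize(text):
    """Lowercase a string and return its set of alphabetic words."""
    return set(re.findall(r"[a-z']+", text.lower()))

def answer(question, context):
    """Return the context sentence sharing the most words with the question."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", context) if s.strip()]
    q_words = tokenize(question)
    # max() keeps the first sentence when scores tie.
    return max(sentences, key=lambda s: len(q_words & tokenize(s)))

# Input 2: the block of text the answer is extracted from.
context = (
    "GPT-J was released by EleutherAI. "
    "Stable Diffusion was released by Stability AI. "
    "Both projects publish their model weights."
)

# Input 1: the question.
print(answer("Who released Stable Diffusion?", context))
```

Notice that nothing here was “trained” on the context. Both the question and the context arrive at use time, which is exactly the distinction this section is about.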

The difference between what models are trained on in the training phase and what inputs we ask them to work with in actual use is one of those things that can be hard to keep straight in your head in your first encounters with machine learning.

When it comes to the models typically used in AI content generation, one way to keep the difference between inputs and training data straight is to remember the concept of latent space and to think about everything in terms of search.

Training is about building a mathematical representation of a set of relationships between concepts and information out there in the real world (whatever that is).

Inputs are about locating one or more regions in latent space and then asking:

For a single input we can ask: What information does this point in latent space represent, or what can we find in the vicinity of this point, or what’s opposite to it? (I.e., generation, transformation, and prediction tasks.)

For multiple inputs we can ask one or more of:

How far apart or close together are these points in latent space? (I.e., classification and extraction tasks.)

What’s at the midpoint between these points, or what’s adjacent to them? (I.e., generation, prediction, and transformation tasks.)

You can see in the list above that I’ve framed all seven of the task types from the first section as searches of latent space for one or more points, and as I said above, we humans use all of them together to demonstrate mastery of a domain of study or endeavor. Probably nothing, right?
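
If the “search” framing feels abstract, this toy sketch may help. It assumes a made-up two-dimensional latent space with four hand-placed concepts (real models learn spaces with hundreds or thousands of dimensions), and the three helper functions map onto the three kinds of questions above: how far apart, what’s in between, and what’s nearby.

```python
import math

# Hypothetical 2-D latent space; the coordinates are invented for illustration.
LATENT = {
    "kitten": (1.0, 1.0),
    "cat": (2.0, 1.5),
    "dog": (4.0, 2.0),
    "truck": (9.0, 8.0),
}

def distance(a, b):
    """Euclidean distance between two points (how far apart are they?)."""
    return math.dist(a, b)

def midpoint(a, b):
    """The point halfway between two points (what lies between them?)."""
    return tuple((x + y) / 2 for x, y in zip(a, b))

def nearest(point, exclude=()):
    """The named concept closest to a point (what is in the vicinity?)."""
    names = [n for n in LATENT if n not in exclude]
    return min(names, key=lambda n: distance(LATENT[n], point))

# Single input: what is near "kitten", other than itself?
print(nearest(LATENT["kitten"], exclude=("kitten",)))

# Multiple inputs: how far apart are two points, and what sits between them?
print(distance(LATENT["kitten"], LATENT["truck"]))
between = midpoint(LATENT["kitten"], LATENT["dog"])
print(between, nearest(between))
```

In this toy layout the concept nearest the kitten/dog midpoint happens to be “cat,” but that’s only because I placed the points that way by hand. In a trained model, the layout is what training produces.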

An aside about cognition

I think the source of the aforementioned confusion when it comes to training vs. inputs is that we humans don’t really experience learning as a thing you do all at once and that’s separate from applying what you’ve learned. We do go through periods of intensive study in different domains, but these mainly just prepare us to be more efficient absorbers of new knowledge and insights when we actually set out to apply whatever we spent time studying for.

In this respect, the current generation of machine learning models are almost more like athletes than they are like scholars — they’re jocks, not nerds. They train and train and train to do a specific thing, then they go out and do the thing in a setting where their performance is measured. The faster and more accurately they do the thing they’ve trained for, the better we say they are. And the slower and sloppier they are at doing the thing, the more training we say they need.

Given that the Greek root of the words “scholar” and “school” is skholē, which means “leisure, spare time, idleness, ease” (basically the opposite of “training”), I don’t think we’ll have an artificially intelligent scholar until we’ve devised a way for the models to be bored in a productive way. At some point on the way to AGI, there will have to be a conversation that goes like:

Boss: “Why did this machine’s energy use just spike? I don’t see any inputs or outputs.”

Researcher: “I have no idea what it’s doing, or if anything concrete will come out of this session. Hopefully, it’s figuring something out and not just wasting electricity!”

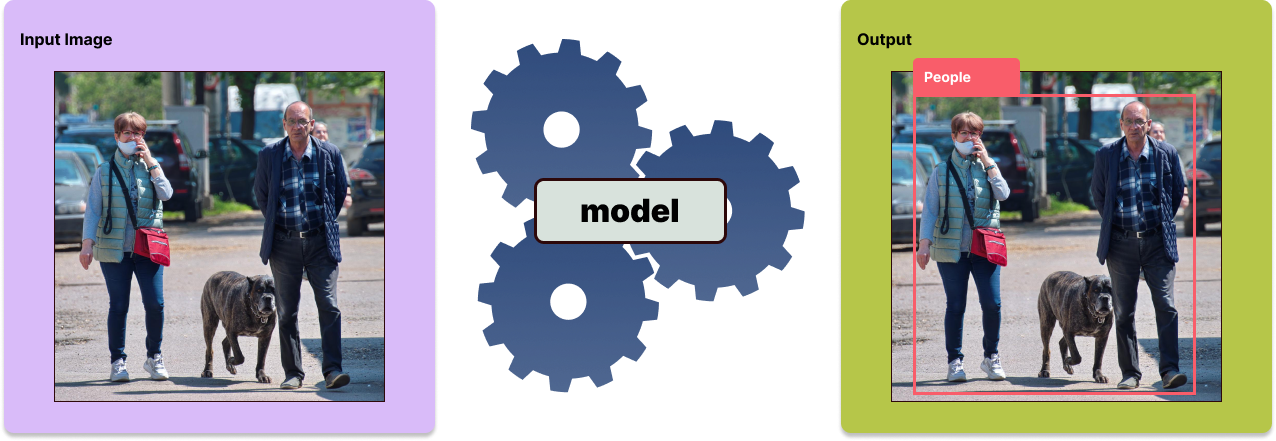

Interpreting images

At the root of all the things we do with machine learning and images — from smart Photoshop plug-ins to steering self-driving cars — are three basic functions, listed below in ascending order of how hard and specific they are:

Image classification

Object detection

Image segmentation

Image classification is the easiest to implement because you only need to train the model to tell what’s in the image. For example, we could pretty easily build a simple image classifier to sort the contents of our personal photo library into one of three buckets — animal, vegetable, or mineral — by training a neural network on photos tagged with one of the three categories.

Image classification use cases include:

Detecting tissue anomalies and other signs of ill health in medical images.

Locating geographic features in satellite imagery for agricultural or military purposes.

But what about photographs with more than one type of thing in them? For such images, models trained to do object detection can tell us about the different objects depicted in the image and even draw simple bounding boxes around them. You’d use object detection to break apart a street scene into cars, people, and landscape features, for purposes of doing surveillance or building a self-driving car.

Object detection use cases include:

Labeling or tagging photographs in a personal photo library

Visual navigation system for a robot or self-driving vehicle

Generating text descriptions of the contents of images for the visually impaired

Image segmentation is essentially a finer-grained version of object detection, where instead of drawing crude bounding boxes around the objects in the image and then enumerating them, the model actually classifies all the pixels according to what type of object they belong to.

Image segmentation use cases include:

Photo editing, where you want to select an object in order to remove or manipulate it.

Chroma keying (i.e., shooting video in front of a green screen, then swapping backgrounds).

In practice, different types of ML-powered content generation and manipulation tools will use one or more of these three capabilities, depending on the type of task you’re performing with them. It may not even be clear which capability is being used, since there’s plenty of overlap between each of these tasks.
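
One way to see how the three capabilities relate is to compare the shape of their outputs. The sketch below cheats: it starts from a tiny image whose pixels are already labeled (the segmentation output, which a trained model would normally produce) and derives the two coarser outputs from it. The grid, the labels, and the function names are all invented for illustration, and it assumes at most one object per label.

```python
from collections import Counter

# A tiny 4x6 "image" with per-pixel labels: "." background, "T" tree, "C" car.
# This grid plays the role of a segmentation model's output.
SEGMENTATION = [
    ".TTT..",
    ".TTT..",
    "..T.CC",
    "..T.CC",
]

def detect(mask, background="."):
    """Object detection: one bounding box (top, left, bottom, right) per label."""
    boxes = {}
    for r, row in enumerate(mask):
        for c, label in enumerate(row):
            if label == background:
                continue
            top, left, bottom, right = boxes.get(label, (r, c, r, c))
            boxes[label] = (min(top, r), min(left, c), max(bottom, r), max(right, c))
    return boxes

def classify(mask, background="."):
    """Image classification: a single label for the whole image (the most common one)."""
    counts = Counter(label for row in mask for label in row if label != background)
    return min(counts, key=lambda k: (-counts[k], k))

print(classify(SEGMENTATION))  # one label for the whole image
print(detect(SEGMENTATION))    # one box per object
```

Each step down the list throws away detail: segmentation knows every pixel, detection keeps only a box, and classification keeps only a single label.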

Transforming and generating images

In the previous article, we covered the theory behind how AI image generation tools like Stable Diffusion work, and you learned that image generation and image-to-image transformation are simply two variants of the same functionality.

To recap, image generation models take in a text prompt and create a new image based on it by searching the model’s latent space.

Image generation use cases include:

Creating new artwork for articles, stories, and games from sketches and existing artwork

Generation of keyframes for animation or other types of video

The best way to understand image-to-image generation is to watch someone do it:

Image-to-image use cases include:

Quickly turning crude drawings into fully realized images

“Spinning” a copyrighted (and even watermarked) image so that you get a royalty-free version.

Interpreting text

Alongside computer vision, natural language processing (NLP) represents one of the oldest families of problems in AI. Early AI pioneers initially thought many NLP tasks would be solved pretty quickly, but they vastly underestimated how hard language is to work with and how closely tied to Big Questions of consciousness and mind language problems are.

Note: Many of the example images and animated GIFs in the sections below were generated using Hugging Face, which is a great site for exploring different models and tasks.

Text classification is about analyzing a text and assigning relevant labels to it, exactly like image classification does with images. Social media platforms use text classification to determine if a post constitutes harassment or spam. Financial firms use text classification to perform sentiment analysis on news reports and social media posts, to anticipate how the market might respond to a new development like a product release or an earnings report.

Sentence similarity is a task where the model tries to determine how similar a given input sentence is to (and how different it is from) one or more other input sentences.
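
As a rough illustration of the sentence similarity task, here’s a sketch that scores sentences with cosine similarity over plain word counts. This is a deliberately crude substitute for the learned embeddings a real model would use, and the function names and sample sentences are my own.

```python
import math
import re
from collections import Counter

def bag_of_words(sentence):
    """Count each lowercase word in the sentence."""
    return Counter(re.findall(r"[a-z']+", sentence.lower()))

def cosine_similarity(a, b):
    """Cosine of the angle between two word-count vectors (0.0 to 1.0)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def rank(query, candidates):
    """Sort candidate sentences from most to least similar to the query."""
    q = bag_of_words(query)
    return sorted(candidates, key=lambda s: cosine_similarity(q, bag_of_words(s)), reverse=True)

candidates = ["stock prices fell sharply today", "a cat sat on a mat"]
print(rank("the cat sat on the mat", candidates))
```

Word counting only notices shared vocabulary, so it would miss a paraphrase that uses different words. Catching those is the reason to use a trained model’s embeddings instead.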

Transforming and generating text

Text transformation and generation work largely the same way that image transformation and generation work, and in some cases the same model can actually be trained to work with either type of input (i.e., pixels or words).

Text generation is what you’re here for, no doubt — the automated creation of original texts from short prompts.

Translating from one language to another is one of the earliest uses for NLP, and it’s still a very hard problem with plenty of parts left to solve. I go into some of the lasting difficulties in this article on machine translation. In a nutshell, translation has so many subtleties that are so intimately tied to deep problems in knowledge, language, and consciousness that we’ll probably always be working on it in some form or another.

Summarization is an area where language models have made great progress. Texts can be capably summarized by current-gen models, their core concepts and ideas distilled down into a readable text that’s targeted at a specific difficulty level.

Text-to-text generation is essentially the same as the image-to-image capability described above. Such models are already finding use as “article spinners,” where “spinning” is SEO-speak for rewriting someone else’s content so that it smells original to Google.

We can also use text-to-text generation to change the difficulty level of the language used in a piece of text, for instance, to make it more accessible to the cognitively impaired. But who are we kidding with this — the vast majority of use cases are going to involve article spinning for SEO and marketing.

Conversational models are currently in widespread use in chatbots of the kind that pop up when you visit a site for the first time and ask you if you need any help. The performance of these chat models will continue to improve, especially on narrowly defined domain-specific topics, so that they’ll ultimately replace quite a bit of the helpdesk and support workload in many markets.

Question answering is often used alongside conversational models in a product support context, but it’s a separate task. Specifically, this task involves examining a corpus and extracting question/answer pairs. A conversational chatbot can then use the output of these models to recognize questions and surface the correct answer for users.

Named entity recognition is the textual version of the object detection described above, where words in a block of input text are tagged with terms like “person,” “animal,” “time,” “corporation,” etc. Many models are so good at NER now that they can exceed human performance.
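
To show what NER output looks like, here’s a bare-bones sketch. It uses a hand-written lookup table in place of a trained model, so it only recognizes the handful of words I’ve listed. The table, the labels, and the `tag_entities` name are hypothetical.

```python
import re

# Hypothetical lookup table standing in for what a trained model would learn.
GAZETTEER = {
    "openai": "corporation",
    "eleutherai": "corporation",
    "monday": "time",
    "robert": "person",
}

def tag_entities(text):
    """Return (word, label) pairs for every word found in the lookup table."""
    tagged = []
    for word in re.findall(r"[A-Za-z]+", text):
        label = GAZETTEER.get(word.lower())
        if label is not None:
            tagged.append((word, label))
    return tagged

print(tag_entities("On Monday, OpenAI announced a new model."))
```

A real NER model uses the surrounding sentence to label words it has never seen before, which a fixed table can’t do.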

Getting to know the models

One of the reasons the main section in the middle of that diagram at the beginning of this article — the part showing the models themselves — is so centralized relative to the regions on either side of it, is that it costs a lot of money to train a large model with billions of parameters. (Note: That middle part is by no means a comprehensive list of models; I just put some of the main ones in there.)

Because of the way performance in ML seems to scale with the number of parameters and the size of the dataset, bigger is going to be better for the foreseeable future. This means a handful of massive, expensive models with a constrained set of capabilities will lie behind the massive, constantly churning ecosystem of user-facing apps.

To be clear: it’s expensive to train these models, but once they’re trained then they’re an order of magnitude less expensive to run. Take Stable Diffusion as an example. It cost about $600K to train it, but you can run it on a decent gaming PC or a good laptop.
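
A quick back-of-the-envelope calculation shows why a model like Stable Diffusion fits on consumer hardware while the biggest language models don’t. The parameter counts below are the ones quoted in the model sections of this article; the two-bytes-per-parameter figure is my assumption (16-bit weights), and the result covers only the stored weights, not the extra memory used while the model runs.

```python
# Parameter counts as quoted in this article.
PARAMETERS = {
    "Stable Diffusion": 890e6,
    "GPT-J": 6e9,
    "GPT-3": 175e9,
}

BYTES_PER_PARAMETER = 2  # Assumption: 16-bit floats; 32-bit would double these figures.

def weights_gigabytes(parameter_count, bytes_per_parameter=BYTES_PER_PARAMETER):
    """Approximate size of the weights alone, in decimal gigabytes."""
    return parameter_count * bytes_per_parameter / 1e9

for name, count in PARAMETERS.items():
    print(f"{name}: about {weights_gigabytes(count):.1f} GB of weights")
```

Under those assumptions, Stable Diffusion’s weights come to under 2 GB, while a 175-billion-parameter model needs hundreds of gigabytes before it does any work at all.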

So while a certain amount of centralization is inevitable when it comes to training, using the trained models and even tweaking the weights to incorporate new training data is increasingly something anyone with a good GPU can do. But for now, we’re still in the era of model centralization.

GPT-3

GPT-3 was the first large language model (LLM) to really shock many AI skeptics by delivering on some of the machine learning hype in an undeniable way. My own “shocked by GPT-3” story is that when it was announced, I read a really solid essay on how it worked and some philosophical issues with it, and it was some six months before I learned that that essay had itself been written by GPT-3.

When GPT-3’s predecessor, GPT-2, was released in early 2019, the technology was still obviously deficient but also pretty promising. Watching GPT-2 go was kind of like watching a dog ride a surfboard — surprising and cute, but that’s mostly it. Based on our experience with GPT-2, I don’t think many of us were really prepared for the magnitude of the jump in output quality that GPT-3 was capable of. It was almost like a state change had taken place, like the jump from water to ice — something qualitatively different had happened.

So what was GPT-3’s big innovation that somehow hoisted OpenAI’s LLMs from the realm of “yeah, neat trick” to “omg it’s a better writer than many undergrads”? In a word, scale. The OpenAI team took the basic architecture they’d been working with since the original GPT and turned up the number of parameters from GPT-2’s 1.5 billion to a whopping 175 billion.

There are four different versions of GPT-3 available, each at a different size and cost level and codenamed after different historical figures. In ascending order of size and cost to use, these versions are:

GPT-3 Ada at 2.7 billion parameters

GPT-3 Babbage at 6.7 billion parameters

GPT-3 Curie at 13 billion parameters

GPT-3 Davinci at 175 billion parameters

The vast majority of the popular AI-powered content apps out there, in every vertical from marketing to SEO to content planning, are using GPT-3 under the hood. If you see an app doing text generation from prompts, it’s usually safe to assume it’s GPT-3-powered unless you find out otherwise. A specialized version of GPT-3, Codex, is also behind the popular code generation tool GitHub Copilot.

Stats:

Company: OpenAI

Launched: June 2020

Parameters: 175 billion

Training datasets: Common Crawl, Wikipedia, proprietary datasets

Capabilities:

Text generation

Summarization

Named entity recognition

Translation

GPT-J

GPT-J is an alternative to GPT-3 that’s important because it’s open-source. Because access to GPT-3 is tightly controlled by OpenAI (this is done in the name of “safety”), we can expect to see more such open alternatives in the future.

This model works about as well as earlier, smaller versions of GPT-3, but still can’t quite keep up with the leading edge, yet.

Stats:

Company: EleutherAI

Launched: June 2021

Parameters: 6 billion

Training dataset: The Pile

Capabilities:

Text generation

Summarization

Named entity recognition

Translation

Code generation

DALL-E 2

DALL-E 2 is the latest and most advanced AI image generation model from OpenAI. Right now, its performance on prompts is leading the pack, but of course, that could change by the time you read this. (Have I mentioned this space moves fast?)

DALL-E 2’s predecessor, the 12-billion parameter DALL-E, was built on essentially the same architecture as GPT-3, but it was trained on a combination of pixels and text. DALL-E 2 introduced a different, more complex architecture that enabled it to reduce the number of parameters while increasing performance.

Internally, DALL-E 2 actually makes use of two main components:

CLIP: A fairly straightforward comparative model that was actually introduced at the same time as the original DALL-E and that’s trained to do the simple job of comparing an input image with an input text caption and measuring how well the image and caption fit with one another.

unCLIP: A module consisting of a pair of submodels that work together to effectively pull stored image attributes back out of CLIP’s latent space and turn them into images:

A prior model that’s trained to convert an input text prompt to an image location in the CLIP model’s latent space.

A decoder model that uses a technique called diffusion to convert into an image the point in CLIP’s latent space that the prior model located.

When you use DALL-E 2 for image generation, you enter a text prompt into a text encoder that then feeds the encoded text into the prior model. The prior finds an image embedding that’s located near the text prompt in the CLIP model’s latent space (an embedding is a sequence of numbers that are the coordinates for a location in latent space). This image embedding is fed into the decoder model, which produces an output image.
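
The flow in that paragraph (text encoder, then prior, then decoder) can be sketched as three chained functions. Everything in this sketch is a stand-in: the embeddings are made-up 2-D points, the “prior” is a nearest-neighbor lookup rather than a trained model, and the “decoder” returns a name where the real one would run diffusion to produce pixels. Only the order of the stages comes from the description above.

```python
import math

# Hypothetical shared latent space: captions and images are both 2-D points.
TEXT_EMBEDDINGS = {"a dog": (0.9, 0.1), "a cake": (0.1, 0.9)}
IMAGE_EMBEDDINGS = {"photo_of_dog": (1.0, 0.0), "photo_of_cake": (0.0, 1.0)}

def encode_text(prompt):
    """Text encoder stand-in: look the prompt up instead of running a network."""
    return TEXT_EMBEDDINGS[prompt]

def prior(text_embedding):
    """Prior stand-in: return the image embedding nearest the text embedding."""
    return min(IMAGE_EMBEDDINGS.values(), key=lambda e: math.dist(e, text_embedding))

def decode(image_embedding):
    """Decoder stand-in: return a name where the real decoder would run diffusion."""
    for name, embedding in IMAGE_EMBEDDINGS.items():
        if embedding == image_embedding:
            return name
    raise ValueError("no image at that embedding")

def generate(prompt):
    """Chain the three stages in the order the text describes."""
    return decode(prior(encode_text(prompt)))

print(generate("a dog"))
```

The useful thing to notice is the handoff: the decoder never sees the text prompt, only the image embedding that the prior located.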

If you didn’t understand any of the above, I’ll try to boil it down using terms introduced in the previous article.

The CLIP model has a latent space that represents abstract knowledge about text and images, and the relationships between different features and concepts — just like we talked about in the previous article. In fact, imagine that CLIP’s latent space is a kind of brain matter that contains learned knowledge about concepts like “dog,” “swimsuit,” “birthday cake,” “above,” “below,” “wearing,” and so on, and can relate those concepts to one another both visually and textually (because it was trained to relate images to captions).

DALL-E 2’s special trick is that it has a submodel (the decoder) that’s trained to take as input a set of coordinates in the fully trained CLIP model’s latent space and turn those coordinates into pictures that represent that region of CLIP’s latent space in some meaningful way.

So in a way, DALL-E 2 consists of one model (CLIP) that knows how images and words fit together and understands the relationships among abstract concepts drawn from both texts and images, and a second, “mind-reading” pair of models (unCLIP) that can use a text prompt to turn a region of that original model’s “memory” into an image that more or less visually represents what’s in that region that was located by the text prompt.

One advantage of training DALL-E 2 to generate images from the image embeddings of a pre-trained model is that such embeddings are a lot less computationally expensive to train on than the compressed pixel representations used by DALL-E. This boost in efficiency is probably a big reason why DALL-E 2 is rumored to have almost 3.5 times fewer parameters than DALL-E yet gives better results than its larger predecessor.

Stats:

Company: OpenAI

Launched: April 2022

Parameters: Speculated to be 3.5 billion, though no numbers have been released. (Compare 12 billion for DALL-E.)

Training dataset: Proprietary

Capabilities:

Image generation from a text prompt.

Image transformation (i.e., blending a pair of input images, or producing variations on an input image).

Inpainting (i.e., modifying specific features or aspects of an image based on a text prompt).

Stable Diffusion

Stable Diffusion is a diffusion-based image generator that works on some of the same principles as DALL-E 2’s diffusion-based prior and decoder models described above, but its explicit goal is to democratize access to AI tools and enable an era of decentralized AI. (Much, much more to come in this newsletter on the topic of decentralized AI, so stay tuned.)

The Stability AI team has taken two key approaches with Stable Diffusion to further this decentralizing, democratizing agenda:

Stable Diffusion’s architecture makes it less expensive to train than some prior models like the original DALL-E. (I’m not sure it’s cheaper than DALL-E 2, though, but more on this below.)

Both the training data and the actual resulting model weights are open-source and freely available to the public, which means that not only can anyone run Stable Diffusion locally, but anyone can retrain it or tweak the weights by training it further on new data.

Stable Diffusion has some important similarities to what I’ve described above for DALL-E 2. It first trains an encoder on text-image pairs, then it trains a diffusion-based decoder model to turn image embeddings from the encoder into images. (As near as I can tell, and I’m still getting my mind around how these models work so I’m open to correction, Stable Diffusion is a lot like DALL-E 2 but without the prior model — i.e., if DALL-E 2 just trained the decoder submodel to work on the output from the text encoder.)

It’s also the case that Stable Diffusion was trained on a specific, art-focused subset of the open-source LAION database. This is why it’s so good at generating different types of artistic-looking output, but not quite as good as DALL-E 2 at photorealistic output.

Stable Diffusion’s open-source nature spurred immense controversy in some quarters when it was released. For whatever reason, people who couldn’t be bothered to get up in arms about the equally open-source text generator GPT-J got pretty exercised about the potential for Stable Diffusion to put artists out of work, empower perverts, perpetuate societal biases, and so on and so forth.

I’ll spend a lot more time on Stable Diffusion, both its architecture and the surrounding controversy, in later articles and podcasts, so stay tuned for all that.

Because of Stable Diffusion’s open-source nature, I expect to see its functionality integrated into a lot of apps in the coming weeks and months. As diffusion-powered, locally-run apps and features proliferate, this will drive a fresh wave of demand for CPU and GPU power on the client.

Stats:

Company: Stability AI

Launched: August 2022

Parameters: 890 million

Trained on: An art-focused subset of LAION-5B

Capabilities:

Image generation from a text prompt.

Image transformation (i.e., blending a pair of input images, or producing variations on an input image).

Inpainting (i.e., modifying specific features or aspects of an image based on a text prompt).
