Creative AI Is Here, Whether You Believe In It Or Not

AI content generation is rapidly passing from the novelty phase to the utility phase

Stable Diffusion has been out for about two months, and it’s already moving from the novelty phase to the utility phase. By this I mean that some of the images generated on apps like PlaygroundAI are now being used not as standalone digital art but as inputs to other creative projects.

Here’s a user who’s using PlaygroundAI to design jewelry:

This user employed img2img to generate textures for race cars in a racing game:

Another user is prototyping home exteriors with the tool:

The following concept art for shoes doesn’t look any more insane than a lot of what I see on people’s feet these days:

All the examples above feature image generation, but AI text-generation models have been around even longer, and you’re almost certainly already reading their output and being influenced by it. This kind of market growth and value creation, much of it happening without the general public’s full awareness, is the reason Jasper.ai just became a unicorn at a $1.5 billion valuation less than a year after launch.

If you doubt that large language models (LLMs) like GPT-3 have already revolutionized the content industry, read this thread:

The bottom line: There’s much debate right now about whether we can describe the output of generative machine learning models as “creative,” but now that AI content generation is entering its utility phase, this debate has become purely academic. On a practical level, you will purchase objects made of both bits and atoms that were designed by an AI, and you either won’t know or won’t care that the “creative” mind behind these designs is a giant wad of math.

Wrong mental models drive bad intuitions

Having admitted that this debate is purely academic, I’m nonetheless going to jump into it here because I’m a nerd who likes this kind of thing. I also think the AI creativity debate can be instructive for non-technical people, insofar as I’m convinced that much of the controversy is driven by bad intuitions about what these models are actually doing when they “make” something.

To put my cards on the table up front, my instinct is to say that what generative models do is the same as, or very similar to, what humans do when they create. I could certainly be talked out of this, but I’m inclined to think this way for two main reasons:

1. We actually have no idea what humans are doing when they create.
2. What generative models do (searching around in the space of possibilities, guided toward a particular point in it by a combination of new inputs and memories of things they’ve seen in the past) sounds a lot like what many human creatives claim they’re doing.

The implication I take from #1 above is this: unless you have a working model of human creativity, how can you insist that what generative models are doing is not what people are also doing? You can’t, but that doesn’t stop people from making such claims. It’s very odd, but I understand why people do it: such claims are rooted not in a mental model of what creative humans are actually doing but in a mental model of what machines are doing.

But this mental model is wrong when it comes to ML, so let’s look closer at it.

Storage-and-retrieval vs. memory-and-recall

In computing, it’s common to draw a technical distinction between “memory” and “storage.”

Memory: RAM or some other volatile, short-term storage that’s close to the processor. Whatever is in memory disappears when the computer is turned off.

Storage: A hard disk, or some other larger pool of non-volatile storage that’s slower, more distant from the processor, and can retain data even when the computer is powered down.

Despite their different names, and especially despite the fact that one of them has the unfortunate name of “memory” (it’s nothing like human memory), the two types of computer storage above do the same thing: they store the same files in the same configurations of bits so that those files can be retrieved and transformed.

Computer systems, then, work on a storage-and-retrieval paradigm, where files are stored losslessly, and then retrieved so that some work can be done on them.
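To make the storage-and-retrieval paradigm concrete, here is a minimal Python sketch using the standard library’s `zlib` module; the `store` and `retrieve` helper names are hypothetical, chosen for illustration. Even when the stored copy is compressed, retrieval hands back exactly the original bytes: nothing is approximated or regenerated.

```python
import zlib

def store(data: bytes) -> bytes:
    """Save data losslessly. Compression makes it smaller but discards nothing."""
    return zlib.compress(data)

def retrieve(blob: bytes) -> bytes:
    """Get back exactly the bytes that were stored."""
    return zlib.decompress(blob)

original = ("The quick brown fox jumps over the lazy dog. " * 20).encode("utf-8")
blob = store(original)
restored = retrieve(blob)

print(f"stored {len(original)} bytes as {len(blob)} compressed bytes")
print(restored == original)  # → True: a bit-for-bit identical copy
```

Whatever transformation happens on the way into storage is fully reversible, which is exactly what makes this paradigm feel so reliable in everyday computing.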

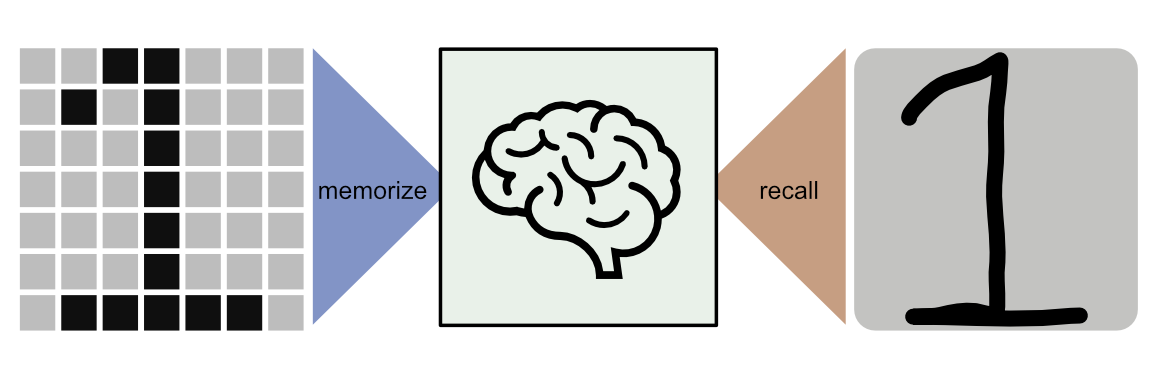

Human memory has two traits that make it very different from a computer’s “memory” or “storage”, both of which are related to the fact that it does not work on the above-described storage-and-retrieval paradigm:

Human memory is lossy, in that it only stores an approximation of the input information, not a bit-for-bit exact copy, and

Human memory is generative, in that when you use it consciously in the act of remembering, you’re actively reconstructing or regenerating certain selected aspects of the original experience in your mind’s eye, or verbally, or in some other form.

To be sure, our minds can memorize many types of symbolic information in exact form (lines in a movie, poems, songs, phone numbers, addresses), but this type of activity requires some dedication. The medium of human memory has to be pressed into such service, almost as if it weren’t designed for lossless data storage but could nonetheless manage it in a pinch.

What we naturally remember is a blend of lower-level inputs — texture, sound, sensation, taste — and higher-order, derived abstractions — concepts, elements, features, and sequences. We can use a memory that’s full of such lower- and higher-level elements to reconstruct a particular experience, or to generate a novel experience.

The act of remembering is also itself another experience, and one that can itself be remembered later (e.g., the recollection of a powerful memory that arose suddenly, maybe triggered by some smell or sound).

The point: To summon a memory is a generative act — we don’t retrieve a static configuration of bits so much as we create a novel experience out of some prior set of impressions and sensations that were derived from the original sensory inputs.

Deep neural networks don’t store & retrieve — they memorize & recall

I laid out the above differences between computing’s storage-and-retrieval paradigm and human memory’s memorize-and-recall paradigm because what ML models do when they learn from their inputs (a process called “training” in the literature) is not file storage. And what such models do when they apply what they’ve learned to classification or generation (called “inference”) is not file retrieval, or really anything like file retrieval.
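As a toy contrast, assuming a deliberately tiny “model” with just two parameters, the sketch below shows the difference in miniature: training keeps a lossy summary of the data (a mean and a standard deviation) rather than the data itself, and inference generates new values from that summary instead of retrieving stored ones. The `train` and `generate` functions are hypothetical; real generative models have millions or billions of learned parameters and are vastly more complex, but the overall shape (parameters learned from data, fresh outputs generated from parameters) is loosely similar.

```python
import random
import statistics

def train(samples: list[float]) -> tuple[float, float]:
    """Hypothetical 'training' step: summarize the data as two parameters.
    The individual samples are not kept; only this lossy summary is."""
    return statistics.mean(samples), statistics.stdev(samples)

def generate(params: tuple[float, float], n: int, rng: random.Random) -> list[float]:
    """Hypothetical 'inference' step: sample new values from the learned
    parameters. Nothing is looked up; every output is freshly generated."""
    mu, sigma = params
    return [rng.gauss(mu, sigma) for _ in range(n)]

rng = random.Random(42)
# Toy training data, e.g. 1,000 simulated heights in centimeters.
training_data = [rng.gauss(170.0, 8.0) for _ in range(1000)]

params = train(training_data)          # two numbers instead of 1,000
new_samples = generate(params, 5, rng)

print(f"learned mean={params[0]:.1f}, std={params[1]:.1f}")
print([round(x, 1) for x in new_samples])  # plausible, but not copies of the inputs
```

Unlike the lossless round-trip of file storage, you cannot get the original 1,000 values back out of these two parameters; you can only generate new values that resemble them.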

Deep neural networks are loosely modeled on human brains: architecturally, they are networks of artificial neurons. And as improbable and miraculous as this sounds, these networks do seem to represent internally the kinds of lower- and higher-order objects that brains hold in memory: textures, shapes, specific people, “person” as a class, and so on.

Many tech-savvy people know ML isn’t doing storage-and-retrieval, but they don’t appreciate how much better my description of human memory above fits these models than the file-based paradigm they’re used to from everyday computing.

Indeed, I had written some character-recognition neural networks in undergrad, but when I took this topic back up more recently, I still had to read a handful of papers on deep learning before it sank in that the networks really do encode concepts, instead of storing compressed or otherwise transformed versions of files that can be retrieved by giving the network a particular input combination.

Even more wild is the fact that the networks encode information about the relationships between abstract concepts — they know that noses go on faces and that cars go on roads.
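One common way to peek at how networks relate concepts is to compare their internal vector representations (often called embeddings) using cosine similarity: related concepts tend to point in similar directions. The sketch below uses hand-picked, hypothetical 3-dimensional vectors rather than vectors learned by any real model, so it illustrates only the measurement, not the learning.

```python
import math

# Hypothetical, hand-picked "concept vectors". Real models learn vectors with
# hundreds or thousands of dimensions; these numbers are invented for illustration.
concepts = {
    "nose": [0.9, 0.1, 0.0],
    "face": [0.8, 0.2, 0.1],
    "car":  [0.0, 0.9, 0.3],
    "road": [0.1, 0.8, 0.5],
}

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

for a, b in [("nose", "face"), ("nose", "road"), ("car", "road"), ("car", "face")]:
    print(f"{a} ~ {b}: {cosine_similarity(concepts[a], concepts[b]):.2f}")
```

Similarity is only the simplest kind of relationship; knowing that noses go *on* faces involves structure that a single similarity score can’t capture, but the idea that concepts live in a shared space where relationships can be measured is the same.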

The storage-and-retrieval paradigm continues to have such a powerful hold on everyone’s mind for at least two reasons:

We use this paradigm constantly, every day, whenever we’re in front of a screen. It’s so intrinsic to our experience of “using a computer” that we don’t even need to think about it explicitly the way earlier computing pioneers did. We just do it.

The fact that these statistical constructs, which are only superficially and simplistically modeled on the brain, actually encode high-level concepts and relate them to one another is really weird and unexpected, even to experts. ML people didn’t expect these constructs to be this good at this very humanlike kind of memory-and-recall activity. You have to see it to believe it, and most of us just haven’t truly seen it.

But once you throw out the storage-and-retrieval paradigm entirely and embrace the more humanlike memory-and-recall paradigm, many of the predictions AI boosters are making suddenly seem a lot less outlandish.

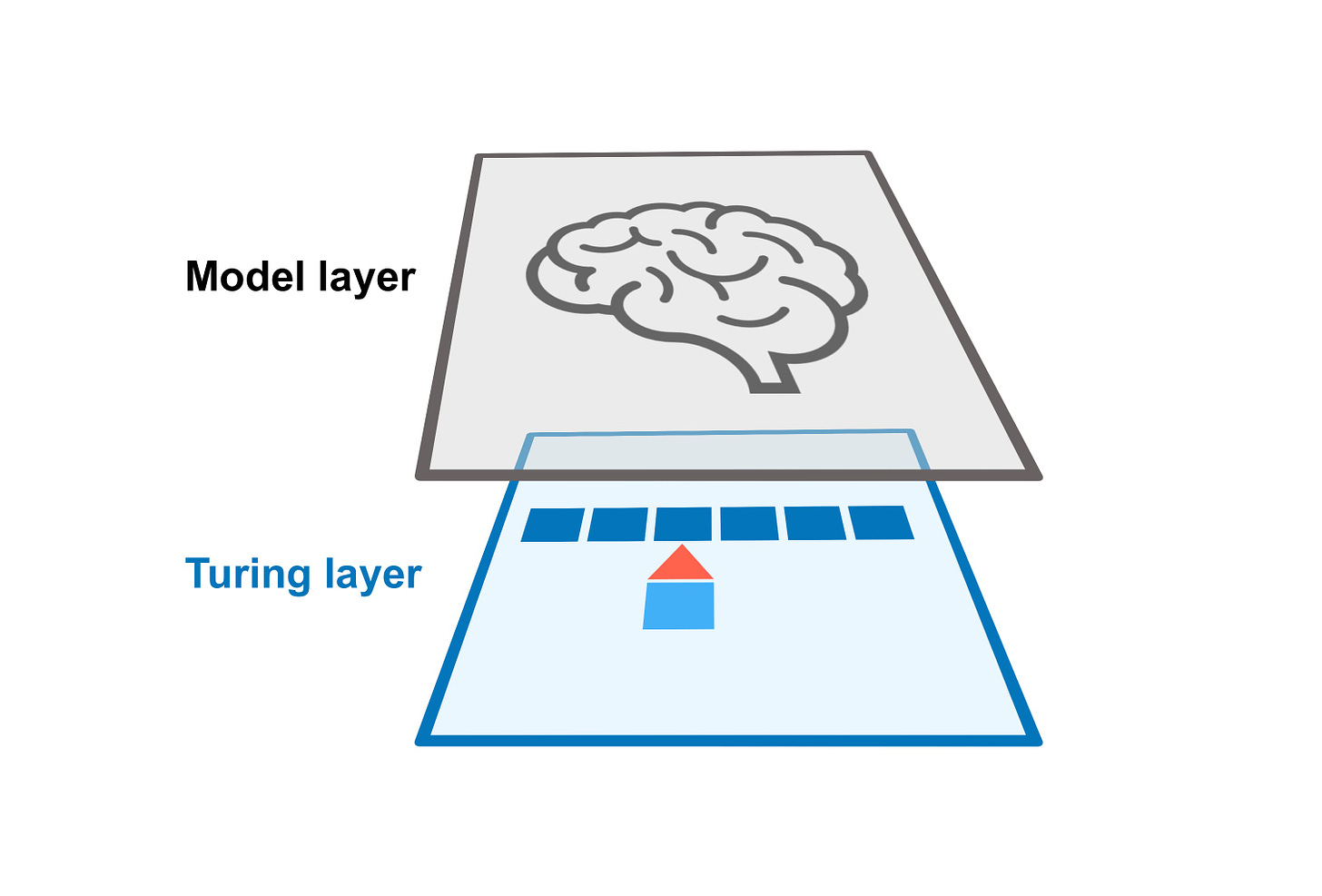

To be clear: At a lower level of abstraction, ML models obviously do use storage-and-retrieval because they run on computers. A set of model weights is a file in the classic sense, and it has to be loaded from the disk into memory to be used. Similarly, when I use a model like GPT-3 or Stable Diffusion, there’s a computer behind the curtain that’s running a program.

The machine learning model, though, is a way to take the classic Turing machine and use it to implement a kind of memory that is stochastic and very non-Turing-like. You can think of ML as a layer of abstraction that sits between us and the computer’s storage-and-retrieval hardware and lets us do more brain-like things on a Turing substrate.


Creativity and remembering

I don’t personally have anything like a fully developed theory of creativity. But I can sort many theories of creativity into two general buckets:

Platonic: The abstract forms of things are pre-existent in some non-sensible realm outside spacetime, and “creativity” amounts to locating a new thing in the space of abstractions that already meaningfully exist. This was the paradigm I used in my first article on AI content generation.

Aristotelian: The forms of things are inferred from material exemplars, and “creativity” amounts to combining in novel ways concrete things that exist in the sensible realm of experience.

I don’t think it’s worthwhile to insist that one or the other of the above is “true” or “correct”; I’m not even sure what it would mean to make such a claim. Rather, whichever of these understandings of creativity you’re inclined toward, one can make a pretty defensible (in my opinion) argument that generative ML models are being “creative” in that sense.

Indeed, my introductory article framed AI content generation’s creative acts in Platonic terms, but with this article’s discussion of memory and recall, I’ve tried to lay the groundwork for understanding creativity in Aristotelian terms. The models are doing something like inferring forms — the form of circle, the form of chair, the form of color, the form of red — from their training data during a training phase, and when you generate with them you’re asking them to combine memorized forms in novel ways to produce novel (and hopefully interesting or useful) configurations of information.
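The Aristotelian picture can be caricatured in a few lines of Python, under strong simplifying assumptions: each exemplar is a hand-made vector of hypothetical features (`has_legs`, `has_seat`, `redness`), a “form” is inferred as the average of its exemplars, and two forms are combined by keeping the stronger value of each feature. That element-wise maximum is a crude stand-in chosen for readability, not how real generative models actually compose concepts.

```python
# Hypothetical features: [has_legs, has_seat, redness], each in 0..1.
chair_exemplars = [
    [1, 1, 0.2],   # e.g. a pale wooden chair
    [1, 1, 0.0],   # e.g. a black office chair
    [1, 1, 0.1],   # e.g. a grey dining chair
]
red_exemplars = [
    [0, 0, 0.9],   # e.g. an apple
    [0, 0, 1.0],   # e.g. a strawberry
    [0, 0, 0.95],  # e.g. a rose
]

def infer_form(exemplars: list[list[float]]) -> list[float]:
    """Average each feature across exemplars to get a prototype, or 'form'."""
    return [sum(column) / len(column) for column in zip(*exemplars)]

def combine(form_a: list[float], form_b: list[float]) -> list[float]:
    """Crude composition: keep the stronger value of each feature."""
    return [max(a, b) for a, b in zip(form_a, form_b)]

chair = infer_form(chair_exemplars)   # roughly [1.0, 1.0, 0.1]
red = infer_form(red_exemplars)       # roughly [0.0, 0.0, 0.95]
red_chair = combine(chair, red)       # roughly [1.0, 1.0, 0.95]

print(red_chair)
print(red_chair in chair_exemplars + red_exemplars)  # → False: a novel configuration
```

The resulting red chair matches none of the exemplars the forms were inferred from; it is a new configuration assembled from learned forms.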

Conclusion

I love debates about memory, creativity, and human experience, and I’m going to keep an open mind on these issues as I continue to learn. This article is just a first draft of my thinking on this topic, shared in the interest of furthering the discussion around what exactly generative models are doing and how.

But as I said in the intro, this technology has now firmly entered its utility phase — people are actually using it to create commercially viable things with both bits and atoms. And you increasingly will not be able to tell when ML did the creative work for the things you’re buying.

The only practical implications of a sophisticated theory of AI creativity that are left for anyone to fuss over are in the legal realm. We’re still trying to understand what it means for our antiquated, creaky concept of “intellectual property” that the signature style of a particular artist can be used without that artist’s participation, knowledge, or consent. The legal battles are just getting started.

I actually think even this legal debate is pointless and destructive, and we should just either abolish most of our IP laws or severely limit them. But until I’m voted king of the world, philosophical debates about creativity will probably get a hearing in the courts.
