Here's How Generative AI Can Fix The News

One way or the other, we're on the verge of a media revolution thanks to generative AI. Here are specific, detailed proposals for how LLMs can make the media dramatically better.

Housekeeping note: I’m still working on the follow-up to my most recent post on RLHF: basically a close look at the preference model that acts as a proxy for the tastes and morals of a group of model humans. But instead of pushing that out today, I want to pause for a brief rant about a topic I know well: the news.

People think AI is going to destroy what’s left of the media. But I honestly think it could play a role in saving the media, and with it maybe even our civilization. What I describe in this piece won’t fix the bad incentives that are destroying our ability to do collective sense-making in the age of outrage, but it would open up the news reporting process to more people and improve the quality of the stories we’re able to tell given the current time and budget constraints the media operates under.

I’ve found myself trying to make this “AI can save the news” pitch to people IRL, so here’s my attempt to get most of the ideas down in one place for future reference.


There is a lot that AI can do for the teams of humans who make a living by helping the public maintain current, reasonably useful mental models of various corners of our collective reality. I’m talking, of course, about the profession formerly known as “journalism,” a profession with origins in the era of print and broadcast that is lately in such sorry shape that it may be best if we just move on from it entirely and start thinking more abstractly in terms of a market for real-time, collaborative sense-making labor.

There’s so much real-time sense-making tooling that needs to be built on top of the current generation of AI models, and I feel like I know what some of this tooling should look like, so in this piece, I’m going to describe it. If I could snap my fingers, I would have all of the things in this piece, and then I’d build a next-generation story factory out of them.

📜 In this post, I’ll walk you through the process of how our legacy sense-making apparatus gets its material (i.e. the “news story”) out to the public. Then I’ll explain how a little soft prompting and some API calls can turn that whole process into something that’s far more accessible to non-specialists and more responsive to the needs of the digital era. Finally, I’ll beg for people to put front-ends over these API calls so I can give them my money and start using this.

If you decide to build something based on any of the ideas in this piece, please get in touch with me. I may be working on some of it myself, so it’s possible we could collaborate. Or, I could potentially connect you with investment capital and potential cofounders and/or employees.

How the media currently makes stories

Before I can get into what an AI-powered sense-making hub (a “newsroom” in legacy speak) might look like, I need to provide some background knowledge on the process of taking a story from a pitch or assignment all the way to a finished product.

🗞️ What follows is the core process that most newsrooms take a story through, with the steps listed in roughly chronological order. Sometimes this process plays out over weeks or months, or sometimes over mere tens of minutes. Some parts of this are skipped, or maybe they’re rolled into other parts. But in general, news production in the age of the internet looks a lot like this:

Reporting: Gathering facts, working sources, understanding what’s going on and framing that as a story.

Drafting: Assembling the output of the reporting step into a structured blob of text and images that readers can consume and that publishers can sell ads or subscriptions against.

Content edits: Direction from an editor about what should and shouldn’t be in the draft, how the draft should be organized, the angle and positioning, voice, and other high-level feedback. A story will often move back and forth between this step and the draft step as it gets iteratively refined.

Line edits: Rewriting some of the language in the draft so that it flows better.

Copy edits: Fixing typos, misspellings, and other minor errors.

Art: The main element added in this step is the hero image (or feature image) — that big image at the top of the article that’s featured in the social promo and that makes people click. But many articles will have other art, like other images for specific sub-headings.

Production: Adding pull quotes, sidebars, special content units like spec/feature lists, and other formatting and visual elements.

Headline and excerpt: Every news org you’ve ever heard of has a dedicated process of some type for generating clickable headlines and excerpts that go in OpenGraph descriptions on social media, a process that may or may not even involve the reporter who wrote the story. (If a news org doesn’t take this seriously enough to have resources dedicated to it, then that’s at least one reason you haven’t heard of them.)

Scheduling: The story has to run at a certain time, depending on the news flow that day and what else is on the calendar. Timing is extremely critical for all stories. Nailing a window of a few hours can make the difference between a viral grand slam and something nobody read.

Promotion: The author has to know the story went live so she can promote it on her socials, and there may be people mentioned in the story who would want to promote it. There may also be people on the team who have lots of karma in some online venues like Reddit or Hacker News where they can get the story some exposure, or maybe they’re in Facebook groups or whatever.

As we’ll see below, there are many places in this storytelling process where a few API calls to an LLM could be a game-changer.

Remixing the storytelling process with the CHAT stack

If you haven’t read my article that describes the new software paradigm that LLMs enable, you should stop and read it immediately. I can’t really recap any of that material in this piece, but I do have to presume you have at least some familiarity with it. So go ahead and check it out:

The CHAT Stack, GPT-4, And The Near-Term Future Of Software


We could apply the CHAT stack described in that piece to the storytelling process described above in something like the following manner:

Brain dump: A reporter works on a story and files either written or voice notes (the latter would be transcribed by AI) describing what new facts he has learned, along with any relevant context he can think of. In other words, this is a brain dump of everything that he thinks is important for the story.

Context dump: A reporting platform would use the brain dump, along with possibly a written pitch giving an overview of the story, and combine it with a bunch of other contextual material that I’ll describe in a moment.

Drafting: The platform places into the LLM’s token window all the material in the previous steps. It also adds a number of other context objects that give the LLM guidance on format, style, voice, and the like. The output of this is a draft.

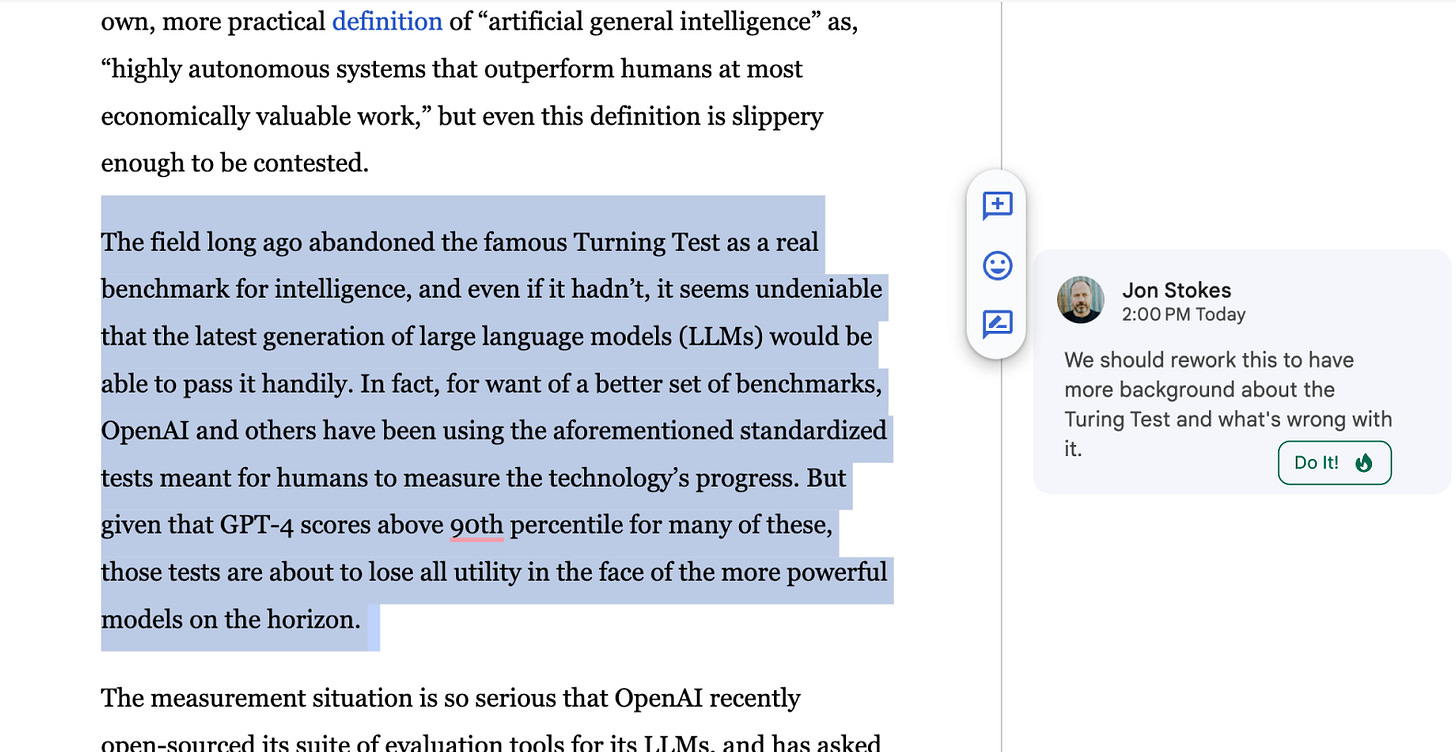

Revisions: The human editor and reporter work together to revise the draft in a collaborative, LLM-powered editor. They highlight portions of the draft that need revision and add comments that act as prompts for the model to suggest revised text for that highlighted part. When they get revisions they like, they accept them and the model puts them in the draft.

Art: The model suggests places in the draft that could benefit from art, and uses the surrounding text to generate art options for the humans to choose from.

Headline and excerpt: The model suggests options for the headline and excerpt (or “hed” and “dek” in news lingo), maybe even ranked and scored by the amount of click juice the model thinks those options have.

🤔 If you scroll back up and look at my list of the steps a story goes through, you can probably spot even more obvious places where ML could have an impact than I’ve called out here. But I want to focus on the parts above because these seem to me to be the ones where LLMs could have an immediate, dramatic, positive impact on the quality of the stories about the world that we all consume via our feeds.

I’ll expand on some of the above list items in the following sections.

The brain dump

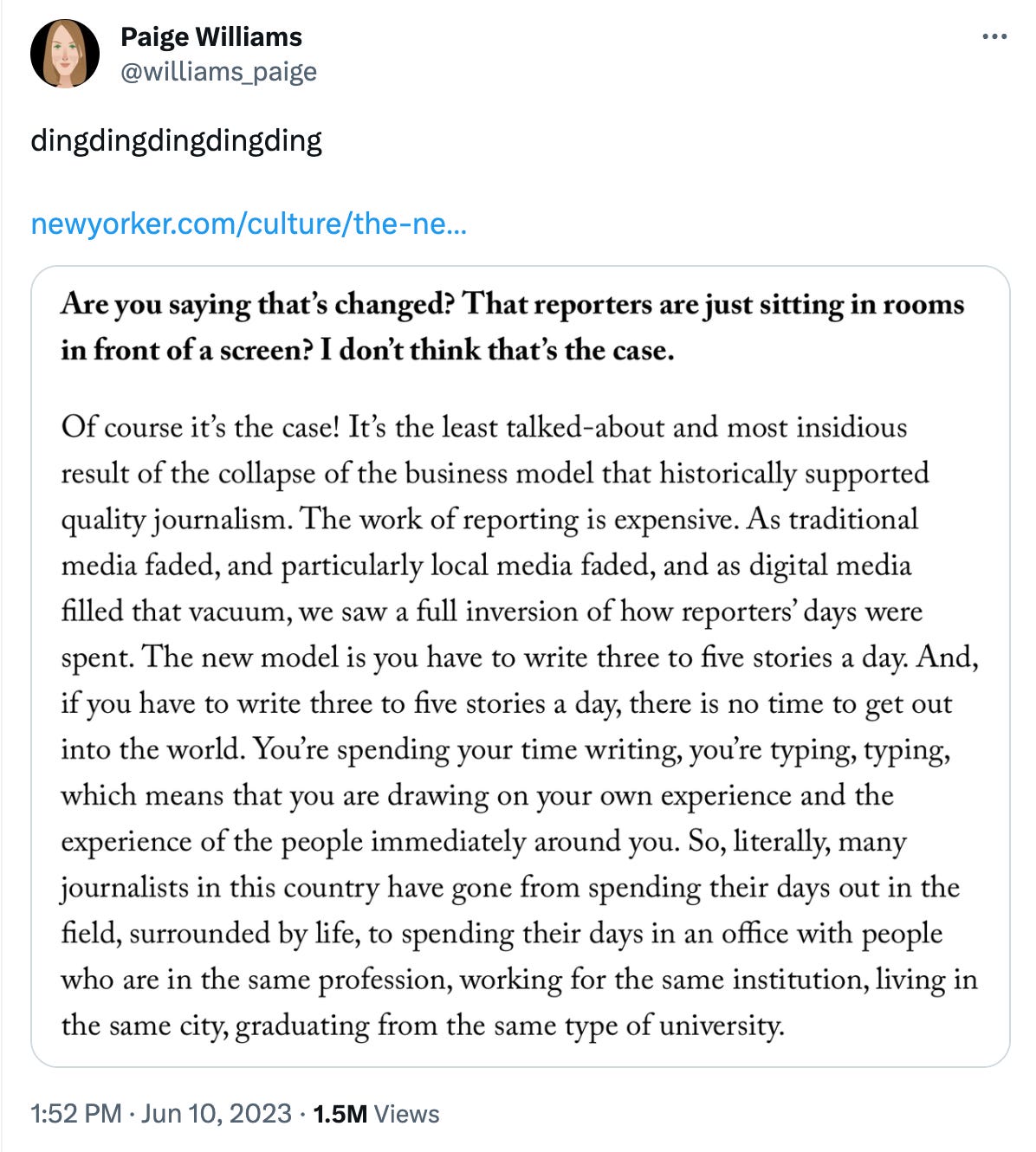

One of the bits of old-school media knowledge that has been lost over the past two decades is that in the bygone era of print, many great reporters at newspapers and magazines were terrible at the craft of writing. They’d either relay a set of facts over the telephone to an “editor” who was going to actually write the piece, or they’d hand in a mess of a draft that had to be completely rewritten.

🦺 I personally came into the business at the tail end of the era when newspaper reporting was still kind of a blue-collar job in some places, at least in terms of the pay and the kinds of people who worked these gigs. When I started writing about tech in the late ’90s, I was able to infer that there were many legacy media types who were bad at writing because, for most of the first decade and a half of my career, I’d get “thank you” emails from grateful editors who were pleasantly surprised at what good shape my drafts were in.

These nice emails stopped a few years ago, and I think that was because of two developments that are relevant to our discussion:

The people who submit work to most outlets are now a pretty culturally uniform set who hail from good schools and can grind out good copy, even if that copy doesn’t actually say much.

Most outlets have just quit editing and are publishing drafts that have barely been cleaned up. Seriously. You can submit copy to really big web outlets that still has TKs in it when it goes live on the site. (Ask me how I know this.)

Neither one of these developments is positive for news consumers.

First, we were all better off when a reporter was someone with some hustle, deep connections in a community, and an ability to sniff out and piece together stories, especially stories that powerful people don’t want told. People who have those qualities should be able to be reporters regardless of whether they can even spell, much less reliably manifest 800 words in a classic inverted pyramid with a clear nut graf and a memorable kicker. It would be better for all of us if reporting turned back into the type of work that people of a wide range of backgrounds, education levels, values, and language proficiencies can do.

As for editors, if outlets aren’t going to pay people to edit (and believe me I get it, it’s grim out there), then maybe we can find some way to cheaply put a little of the lost polish back into the process. That would be a win for audiences.

🔧 LLMs can fix this.

An LLM could do much or all of the drafting and editing work that sits in between the ground-level legwork of reporting and the high-level strategic work of content edits. Indeed, it’s very easy to imagine that an LLM could turn a reporter’s verbally transmitted brain dump into a solid draft with a bit of context and clever prompting.

👉 The equation for getting a high-quality draft of a story out should be: brain dump + context dump = draft.

Right now, in 2023, currently deployed LLMs are already capable of taking us back to the era when a reporter could tell a story by narrating a brain dump into a microphone and then iteratively giving feedback on draft versions. There is truly no need for reporters to have writing chops anymore, and in fact, we’d probably all be far better off if most reporters were from the kinds of educational and cultural backgrounds that tend to produce unskillful writers.

The context dump

🧠→📰 Turning a brain dump into a finished story is about much more than machine transcription and naive next-token prediction. The best stories are able to place new information in a proper interpretive context. To that end, there are three categories of context tokens that we’ll want to combine with the brain dump in order to make this plan work:

Story background

Format examples

Style examples

Story background can come from three places:

Sources, links, Twitter threads, and similar that the writer and/or editor want to supply as relevant context for the article.

Documents that have been surfaced via a relevance search in some datastore like Chroma as described in my CHAT stack post. This is most likely going to be previous articles from the same publication on that topic, company, technology, geography, or whatever.

Parts of source documents that have been marked up as important to this particular story.

The first two types of background above should be self-explanatory — I’m basically talking about both manual and automated methods for telling the LLM what material is important for the story. On that third point, I want to be able to highlight the parts of a particular document that are most salient, so that the LLM knows “these bits are important, so find a way to work them into the output.”
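The automated retrieval path can be sketched without committing to any particular datastore. What follows is a minimal illustration, not how Chroma or any real vector store works internally: it uses bag-of-words cosine similarity as a crude stand-in for embeddings, and the `archive` contents and the function names (`tokenize`, `cosine`, `top_background`) are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in for a real embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def top_background(brain_dump: str, archive: dict[str, str], k: int = 2) -> list[str]:
    """Return the ids of the k archived articles most similar to the brain dump."""
    query = tokenize(brain_dump)
    ranked = sorted(
        archive,
        key=lambda doc_id: cosine(query, tokenize(archive[doc_id])),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical archive of a publication's past coverage.
archive = {
    "chips-2022": "The chip maker delayed its new GPU launch after supply problems.",
    "council-vote": "The city council voted on the new zoning plan on Tuesday.",
    "gpu-review": "Our review of the GPU found strong performance and high power draw.",
}

print(top_background("Sources say the GPU launch is delayed again", archive))
```

A production system would swap `tokenize` and `cosine` for a real embedding model and index, but the shape of the call (brain dump in, ranked prior articles out) would likely stay the same.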

Format examples give the LLM a sense of the structure of the type of article I’m trying to produce (an explainer, a product launch, a breaking news announcement, etc.). These story formats are all pretty heavily formulaic at most shops, so having marked-up templates and examples for the LLM to work from should be easy.

Style examples are pieces of writing done in a certain voice or tone, probably mainly consisting of past work from the reporter who’s filing the story. Or, there may be examples of a house style that can be used for certain stories.

These three types of context can be assembled in a single interface and can guide the LLM’s generation of a draft from the brain dump material.
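To make "brain dump + context dump = draft" concrete, here is a minimal sketch of the assembly step only. It builds a single prompt string and stops there; the call to an actual LLM is left out on purpose. The `ContextDump` class, the `build_draft_prompt` function, and the section labels are my own illustrative assumptions, not part of any existing tool.

```python
from dataclasses import dataclass, field


@dataclass
class ContextDump:
    """The three context categories described above (names are hypothetical)."""
    background: list[str] = field(default_factory=list)
    format_examples: list[str] = field(default_factory=list)
    style_examples: list[str] = field(default_factory=list)


def build_draft_prompt(brain_dump: str, context: ContextDump) -> str:
    """Concatenate labeled sections into one prompt string for an LLM."""
    sections = [
        ("STORY BACKGROUND", context.background),
        ("FORMAT EXAMPLES", context.format_examples),
        ("STYLE EXAMPLES", context.style_examples),
        ("REPORTER BRAIN DUMP", [brain_dump]),
    ]
    parts = []
    for title, items in sections:
        if items:  # skip categories the team left empty
            parts.append(f"## {title}\n" + "\n---\n".join(items))
    parts.append("## TASK\nWrite a draft news story using only the facts above.")
    return "\n\n".join(parts)


context = ContextDump(
    background=["Prior article on the merger."],
    style_examples=["Short, punchy sentences."],
)
print(build_draft_prompt("CEO confirmed the layoffs by phone.", context))
```

Keeping each category in its own labeled section is one reasonable design choice: it makes it easy to add, drop, or reweight a category without touching the others.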

Drafting and revisions

📝 I can easily imagine a UI that has some sliders or dials for the various context token types that I can operate in real-time as the LLM runs and see their impact on the output. Maybe I’m dialing in more style tokens and checking the results, or dialing back the style and pulling in more background tokens, or maybe boosting the amount of formatting guidance in the mix. However it looks, the UI will need to support playing with options and iterative tweaking.

The UI will also need affordances to support token and cost management when filling the context window. I’ll want to be able to budget my tokens on-the-fly so that I know how much each type is contributing to filling the finite token window.
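The sliders and the token budget are really the same mechanism: each slider is a weight, and the weights divide up whatever room is left in the window. Here is a rough sketch of that arithmetic. The window size, the reserved output allowance, and the word-count proxy for tokens are all assumptions for illustration; a real tool would use the model's own tokenizer.

```python
def allocate_budget(
    window: int, reserved_for_output: int, weights: dict[str, float]
) -> dict[str, int]:
    """Split the input portion of the token window in proportion to slider weights."""
    available = window - reserved_for_output
    if available <= 0:
        raise ValueError("nothing left in the window for context")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    return {name: int(available * w / total) for name, w in weights.items()}


def trim_to_budget(text: str, budget: int) -> str:
    """Keep the first `budget` whitespace-separated words (a crude token proxy)."""
    return " ".join(text.split()[:budget])


# Hypothetical slider positions: heavy on background, light on format guidance.
sliders = {"background": 3.0, "format": 1.0, "style": 2.0}
budget = allocate_budget(window=8000, reserved_for_output=2000, weights=sliders)
print(budget)
```

Dragging a slider just changes one weight and reruns `allocate_budget`, which is cheap enough to do on every UI event.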

I’ll want a Google Docs-like real-time editor interface where I can collaborate with other writers and with the LLM. I want to be able to highlight some text, add a string of comments (maybe some back-and-forth with writers, editors, and pre-readers), and then turn those comments into changes in the file in an automated fashion.
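The highlight-comment-revise loop boils down to three pieces of data: a span in the draft, a comment thread, and a replacement the humans accept. A minimal sketch, assuming character offsets for the highlight and leaving the model call itself out; `RevisionRequest`, `revision_prompt`, and `apply_revision` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class RevisionRequest:
    start: int           # character offset where the highlight begins
    end: int             # character offset where the highlight ends (exclusive)
    comments: list[str]  # the comment thread attached to the highlight


def revision_prompt(draft: str, req: RevisionRequest) -> str:
    """Turn a highlighted span plus its comment thread into a prompt for the model."""
    highlighted = draft[req.start:req.end]
    thread = "\n".join(f"- {c}" for c in req.comments)
    return (
        f"Rewrite only this passage:\n{highlighted}\n\n"
        f"Address these comments:\n{thread}"
    )


def apply_revision(draft: str, req: RevisionRequest, new_text: str) -> str:
    """Splice an accepted suggestion into the draft in place of the highlight."""
    return draft[:req.start] + new_text + draft[req.end:]


draft = "The company shipped late. Sales fell."
start = draft.index("shipped late")
req = RevisionRequest(start, start + len("shipped late"), ["Too vague. Say what slipped."])

print(revision_prompt(draft, req))
# Pretend the model suggested this text and the editor accepted it.
print(apply_revision(draft, req, "missed its ship date"))
```

A real collaborative editor would need offsets that survive concurrent edits, which is a harder problem than this sketch lets on.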

Finally, the drafting engine should know what’s hot and how to include that material in a draft. See this article for more:

Why I'm A Better Editor Than GPT-4 (& Probably GPT-5)


Art

I like PlaygroundAI.com a lot, but right now, its social aspects are a long way from adding up to the tool that I need to run a content shop with. I need to be able to generate and manage art assets, prompts, templates, and customized models in a collaborative fashion with role-based access controls, rich metadata, version histories, and the works.

🎨 In short, I need an art platform that lets a team do the following:

Generate assets collaboratively by tossing prompt variations back and forth in real time and commenting on the results. (I can do a little of this in Discord with Midjourney, but there’s no team billing, yet.)

Share and organize an internal library of generations, source images, prompts, filters, and customized checkpoint files.

Control who can see and do what internally based on roles. An admin should be able to add new model checkpoints or filters, while a freelance writer might only get access to certain proprietary filters or models.

Manage a budget by deciding how many generations can be done per story or per writer. I really do need visibility into and control over the platform resources my team is using to make image generations for articles.

The right CMS could plug into this art platform by auto-generating prompts in the right places in the article for relevant artwork and then rendering a few options for the team to look at right in the document view.

🔎 A preview function in an editor with the right integrations would support the ability to preview how the article would look on the site with different image generations in it, even going so far as letting me generate new options from within the preview interface and see the page change.

Production

Stories need to be laid out, with all of the art appropriately sized and positioned, pull quotes added to break up long blocks of text, subheadings and bullet lists used appropriately, and so on. There’s just a ton of cleanup and tweaking that has to be done in order to match the story’s presentation to some house visual style and to optimize it for engagement.

A lot of this production work can and should be automated by LLMs based on prompting. There are tools like Framer that can turn a text prompt into a fully realized web page layout, so I can easily imagine tools that take prompts like “educate all the quotes,” “check all the block quotes for source links,” “make sure all the links in the piece work and are correct,” “add a features list widget by pulling specs from this press kit I just uploaded,” “check the photos for attribution,” and turn them into staged changes that can be accepted or rejected after a brief preview or diff screen.

➡️ Most of the production tasks I can think of could actually be reduced to a single prompt: “Go through the production checklist in Notion and make sure everything’s in order.”
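The "staged changes" idea is worth sketching, because it is what keeps humans in the loop. Below, one production instruction ("educate all the quotes") becomes a proposed change with a diff that an editor can accept or reject. The quote handling is deliberately naive (paired straight double quotes on a single line only), and `StagedChange`, `educate_double_quotes`, and `resolve` are hypothetical names; in the full vision an LLM would propose the change, whereas here a regex stands in for it.

```python
import difflib
import re
from dataclasses import dataclass


@dataclass
class StagedChange:
    description: str  # the production instruction that produced this change
    before: str
    after: str

    def diff(self) -> str:
        """Unified diff for the preview screen."""
        return "\n".join(
            difflib.unified_diff(
                self.before.splitlines(),
                self.after.splitlines(),
                fromfile="current",
                tofile="proposed",
                lineterm="",
            )
        )


def educate_double_quotes(text: str) -> StagedChange:
    """Naively turn paired straight double quotes into curly ones."""
    after = re.sub(r'"([^"\n]*)"', "\u201c\\1\u201d", text)
    return StagedChange("educate all the quotes", text, after)


def resolve(change: StagedChange, accepted: bool) -> str:
    """Return the text that should land in the CMS after review."""
    return change.after if accepted else change.before


story = 'She said "ship it" twice.\nNo quotes here.'
change = educate_double_quotes(story)
print(change.diff())
print(resolve(change, accepted=True))
```

Every checklist item can produce its own `StagedChange`, so the review screen is just a list of diffs with accept and reject buttons.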

Promotion

Many of the context tokens used in the reporting and editing phase — background, style, current materials like Twitter threads and sources — can and should be used as inputs in the promotion step.

✍️ A properly tuned promotion engine could go back to the brain dump and context dump to produce novel summaries of the source article suited for Twitter threads, Reddit and FB posts, email blasts, and the like.

I could even see the model taking what it knows about my followers and what they’re currently tweeting about, and optimizing a suggested Twitter promo thread for maximum viral potential in the current nanosecond on my TL.

Closing the loop

📈 At every step of the process I’ve described here, an integrated suite of real-time storytelling tools should be using traffic data, click data, heat map data, and so on, to learn what’s working and what isn’t, and then that information can be fed back into the storytelling process at every step.

I want the context token results to improve as the tool I’m using to gather and store context learns about my audience.

I want the drafting engine to know what’s hot on my TL right now, so it can throw in the kinds of color that give stories viral juice.

The art generator should draw on historical click-through data when suggesting prompts, filters, and models for the current piece.

The production tool should know from past heatmap and scroll metrics what kinds of formatting are working best at the moment for which layout elements.

I could probably keep filling out this bullet list, but a whole marketplace of LLM-powered tools and platforms could do an even better job of dreaming up ways to merge metrics into every stage of the storytelling process.
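As one small example of merging metrics back into the process, here is a sketch of ranking layout elements by click-through rate. It uses additive smoothing so that a variant with two impressions doesn't beat one with a thousand on luck alone. The prior values, the variant names, and the numbers are all made up for illustration; `VariantStats` and `rank_variants` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    impressions: int = 0
    clicks: int = 0

    def smoothed_ctr(
        self, prior_clicks: float = 1.0, prior_impressions: float = 50.0
    ) -> float:
        """Click-through rate pulled toward a prior when data is thin."""
        return (self.clicks + prior_clicks) / (self.impressions + prior_impressions)


def rank_variants(stats: dict[str, VariantStats]) -> list[str]:
    """Order variants from best to worst smoothed click-through rate."""
    return sorted(stats, key=lambda name: stats[name].smoothed_ctr(), reverse=True)


# Made-up numbers: bullet_list has a 50% raw CTR, but on only two impressions.
history = {
    "pull_quote": VariantStats(impressions=1000, clicks=80),
    "bullet_list": VariantStats(impressions=2, clicks=1),
    "sidebar": VariantStats(impressions=500, clicks=10),
}
print(rank_variants(history))
```

The same ranking could feed headline options, art prompts, or promo copy; only the keys of `history` change.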

We can and should build this

What I’ve described here is not a single news platform. Rather, it’s a composable ecosystem of AI-based products for editing, production, art, and promotion. There are multiple startups in the above sections — I think it would be a mistake to try to roll all of this into one monolith.

If it does end up as a monolith, though, probably “Replit, but for the future of news” would be the one-line pitch.

🙋‍♂️ I truly believe a set of tools like the ones I’ve described here would make “the news” more democratic by bringing this critical sense-making and storytelling work within reach of many more people, who could do it at a higher level of quality and on a smaller scale than anything the rapidly collapsing legacy news business could imagine. The stories will be of higher quality and people who want to read them will pay for them.

We can and should build this stuff. It’s time to quit fear-mongering about AI doom and hand-wringing about the death of journalism, and just create what’s next. Who’s with me?

I’m looking for partners on my project. www.briefer.news

I’m aggregating and summarizing, and looking to create meta summaries by region and subject.

Interested?

Biomant@protonmail.com

A page was published recently about fixing journalism with AI. It notes the market opportunity for mainstream news to regain the public's trust, along with other ways to aid the news media, like making local journalism more efficient and "pair journalism," which pairs AI with journalists in a way akin to "pair programming." Just an excerpt on the market need to fix a broken product:

https://societyandai.com//p/fix-journalism-using-ai

'A study by Gallup and the Knight Foundation found that in 2020 only 26% of Americans reported a favorable opinion of the news media, and that they were very concerned about the rising level of political bias. In the 1970s around 70% of Americans trusted the news media “a great deal” or a “fair amount”, which dropped to 34% this year, with one study reporting US trust in news media was at the bottom of the 46 countries studied. The U.S. Census Bureau estimated that newspaper publishers’ revenue fell 52% from 2002 to 2020 due to factors like the internet and dissatisfaction with the product.

A journalist explained in a Washington Post column that she stopped reading news, noting that research shows she was not alone in her choice. News media in this country is widely viewed as providing a flawed product in general. Reuters Institute reported that 42% of Americans either sometimes or often actively avoid the news, higher than 30 other countries with media that manage to better attract customers. In most industries poor consumer satisfaction leads companies to improve their products to avoid losing market share. When they do not do so quickly enough, new competitors arise to seize the market opening with better products.

Marc Andreessen, an entrepreneur who was a pioneer of the early commercial internet and is now a venture capitalist, observed that the news industry has not behaved like most rational industries: “This is precisely what the existing media industry is not doing; the product is now virtually indistinguishable by publisher, and most media companies are suffering financially in exactly the way you’d expect.” The news industry collectively has not figured out how to respond to obvious incentives to improve its products. '