How Big Content Will Win The Generative AI Wars

As with all things nowadays, not the courts or Congress but an unnamed team of faceless, unaccountable mods will decide what billions of people can or can't see, do, share, and create.

In a recent article on AI content generation, I suggested that the courts will have a lot to say in the coming years about the intersection of generative AI and copyright laws. But now that I’ve had more time to ponder the issue, I’ve come to the conclusion that laws and courts will have only the most indirect impact on the future of generative AI.

Why? Because there’s a powerful technical and institutional apparatus that we’ve all come to accept as having the final word on what we can and cannot do/share/see/say online, and it’s not the legal system.

Rather, decisions about what billions of users can do on a handful of large tech platforms are left to the “trust and safety” teams inside the bowels of Big Tech. Furthermore, these moderation decisions are made under the framework of risk mitigation, which amounts to mitigating two types of risks:

Risks to users from spam, malware, harassment, alleged disinformation, and other types of digital content that might cause “harms.”

Risks to powerful vested interests from IP law violations, both real and totally, illegally, pants-on-fire fabricated.

In short, the courts and legislature will not decide the fate of AI-generated content online; the mods will. And the mods will decide this new issue because we already let them decide every other issue that matters for our online lives.

I think the generative AI wars that are already kicking off will go this way, and that what’s legal and what isn’t will matter relatively little in the end, because I’ve already seen this particular movie before.

The gun debate gives a preview of what’s coming

America’s gun debate — especially in the area of 3D-printed guns — provides a preview of how all of this is going to play out. (Subscribers to this newsletter may or may not be familiar with my past work on the gun rights issue, and my involvement in a no-culture-war-bs gun rights org called Open Source Defense.)

Whatever you think about guns, I hope you can bracket that for the moment and just focus on the relevant points about moderators vs. the law, which I will unpack after a brief review of the great 3D-printed gun de-platforming of 2018.

I want to draw your attention to the time that Reddit and Facebook both banned a specific domain name for hosting CAD files that were 100% legal and had not been outlawed or otherwise censored or banned by the DOJ, the courts, or any other part of our government. They also banned posting news articles about the domain name and about their ban of said domain name.

I should clarify a few facts about this incident because they matter a lot to my larger points about AI content generation and what the platforms are likely to do.

First, the news reports from the time stating that these CAD files for 3D-printed guns had been declared illegal by the DOJ were 100% false.

The files themselves were not illegal to host or possess, and the DOJ specifically prohibited only a single domain (defcad.org) from hosting them as part of an action against 3D-printed gun maker Defense Distributed.

Furthermore, manufacturing a firearm in your home from parts you find at Home Depot, or by 3D printing some parts, is and always has been totally legal in the US. There are no federal laws against making homemade guns, and for nearly all of US history, this has been true at the state and local levels, as well.

In the wake of the “ghost gun” furor that started up in 2016 or so, only a handful of states and municipalities eventually passed laws to either ban or require registration of homemade guns that have no serial number. In the vast majority of the US, you can make a simple slam-fire shotgun with some pipe and a nail without breaking any federal, state, or local law.

So to recap, the digital CAD files that Reddit and Facebook banned (along with any domain hosting them, and even links to news articles about their own bans) had the following characteristics:

They were totally legal to host or possess.

The activity they enabled, i.e. homemade gun manufacture, was totally legal.

They were just CAD files for 3D printers — in other words, they were computer code.

No state or federal entity had issued any official letter, at least none that was publicly available, declaring that those files were illegal and/or should be censored.

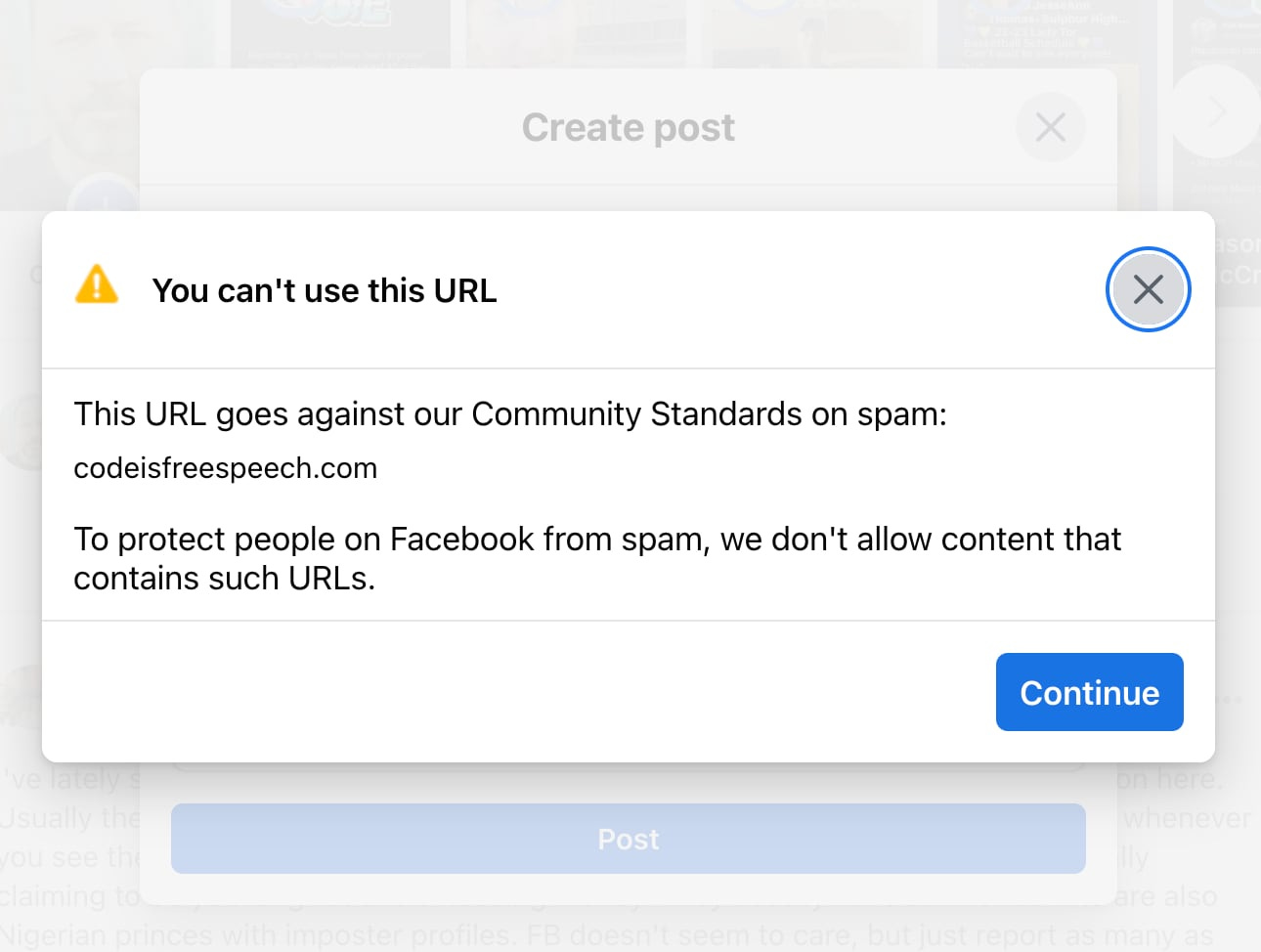

Despite the above, you still can’t type the codeisfreespeech.com domain into Facebook. Go ahead and try it.

I haven’t tried again recently with Reddit, but I’d be surprised if they’ve removed their ban on it.

From CAD files to fan art

What’s most likely to happen with AI-generated art, especially art that makes use of copyrighted material (characters, settings, stories, etc.), is that it’s going to get the same treatment that the aforementioned CAD files got. IP holders in Hollywood and elsewhere will start sending takedown notices for art like the Spider-Man art that features in this recent post of mine, and even though all this material falls under “fair use,” it’ll get removed.

Centralized tech platforms will also screen for such images and text in an automated fashion, certainly using AI, and will “nope!” anyone who tries to post such material on them.

I suspect it won’t be as difficult to do such automated blocking of copyrighted images as you might think, given that such images can be passed into image-to-text models (the reverse of text-to-image models like DALL-E and Stable Diffusion) to generate “captions” that describe what’s in them. It won’t be hard for Sony and Disney to develop a model that will spot a Spider-Man character in an image and prevent a user from posting it unless that image or its hash also shows up in a database of assets that the IP holders have whitelisted for user sharing.
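The whitelist check described above can be sketched in a few lines. This is a minimal, hypothetical sketch, not any platform's actual system: `detect_characters` is a stub standing in for a real image-to-text or classifier model, and `PROTECTED_CHARACTERS` and `WHITELISTED_HASHES` stand in for the IP holders' databases. It also assumes an exact SHA-256 match; a real filter would more likely use a perceptual hash so that resized or re-encoded copies of an approved asset still match.

```python
import hashlib

# Hypothetical: characters the IP holder wants screened on upload.
PROTECTED_CHARACTERS = {"spider-man"}

# Hypothetical whitelist: SHA-256 digests of assets the IP holder has
# approved for user sharing, seeded here with a single example asset.
APPROVED_ASSET = b"official spider-man promo art"
WHITELISTED_HASHES = {hashlib.sha256(APPROVED_ASSET).hexdigest()}


def detect_characters(image_bytes: bytes) -> set[str]:
    """Stub standing in for an image-to-text or classifier model.

    Hypothetical: a real platform would run a trained model here. This stub
    just searches the raw bytes for a character name so the example runs.
    """
    text = image_bytes.decode("utf-8", errors="ignore").lower()
    return {name for name in PROTECTED_CHARACTERS if name in text}


def allow_upload(image_bytes: bytes) -> bool:
    """Block uploads that depict a protected character unless whitelisted."""
    if not detect_characters(image_bytes):
        return True  # nothing protected detected, so the post goes through
    digest = hashlib.sha256(image_bytes).hexdigest()
    return digest in WHITELISTED_HASHES  # exact-match lookup only


print(allow_upload(b"a photo of my cat"))              # True
print(allow_upload(b"official spider-man promo art"))  # True
print(allow_upload(b"fan-made spider-man drawing"))    # False
```

Note the default: anything the detector flags is blocked unless it is on the whitelist, so whether a given post would qualify as fair use never enters into the decision.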

In short, present norms and trends indicate that the exact same “trust and safety” tools that currently prevent you from seeing links to 3D printed gun files, jihadi propaganda, child porn, and other content that’s either outright illegal or merely politically disfavored, will soon prevent you from seeing Stable Diffusion generations of Hollywood’s IP.

What I’m describing here will essentially be the end of fan art and fanfic. The IP holders have largely left fan art alone as long as the fans aren’t trying to profit from it, but AI content generation is going to force them to go scorched-earth against any and all unlicensed uses of their IP.

They won’t need to pass laws to do this kind of content suppression, because they’re already widely suppressing lawful content under the banner of protecting copyright owners from “theft” and ordinary users from “disinformation.” Big Tech will do whatever Big Content wants, and Big Content will want to crush fandoms.

Patent and copyright trolls will evolve

I have a draft post with extensive thoughts on the issue of style transfer and artists whose signature style is widely copied by users of generative models (Greg Rutkowski being the poster child for this phenomenon). But without going into any of that, on a practical level, it seems likely that artists like Rutkowski will pool their financial resources into collectives that work with the “style” equivalent of patent troll law firms, whose main job is sending takedowns to Big Tech and suing smaller sites for hosting material they deem to be “infringing.”

It seems likely that a new generation of guilds or unions will try to join Big Content in policing Big Tech platforms for totally legal yet “offending” works. And again, I suspect Big Tech will cooperate rather than risk looking anti-creator or getting sued. It’ll be easier and more profitable just to take the fan art and other disputed content off the big platforms than to fight any of this.

What is to be done?

I suspect there are only two possible outcomes here:

We move rapidly to a censorship-proof peer-to-peer paradigm for most content hosting and messaging (where “messaging” very much includes social networking), or

We’ll suffer a total and complete shutdown of our ability to publish and share any derivations of popular IP that are more than simple, meme-style remixes of a large but ultimately limited pool of copyrighted assets that are whitelisted in some database.

We actually had a chance to go the P2P route two decades ago, but we blew it and ended up in the present predicament. As I wrote in a previous article on the topic of P2P vs. centralization:

My point: An entire alternate branch of the evolution of consumer internet hardware, software, and services was simply wiped out, like a meteor wiping out the mammals instead of the dinosaurs. Again, this didn’t happen because the experience of running your own file server sucked or required specialized expertise — it absolutely did not suck, and in fact, the nascent P2P ecosystem was quite painless from a user admin point-of-view. Rather, it happened because without a centralized authority that the RIAA could sue or the feds could threaten with prison, people would have just shared whatever the heck they wanted with whoever the heck they wanted, and we just couldn’t have that.

The technologies that sometimes go under the banner of “web3” or “crypto” have given us an opportunity to take another swing at building a creator-friendly, P2P ecosystem. I hope we make the most of that opportunity because if we blow it again, the future is the endless synthetic reboots and sequels I described in Spider-Man 2025.

"decisions about what billions of users can do on a handful of large tech platforms are left to the “trust and safety” teams inside the bowels of Big Tech".

It is in the nature of our capitalist model to privatize censorship, and it is to the benefit of our politicians to avoid being tarred with the 'censorship' brush.

It is in the nature of China's model to publicize censorship, as they have done for centuries. They appoint one of their leading public intellectuals to run the program, publicize the rules he must follow, and encourage people to challenge unfair censorial decisions. That's why 80% of Chinese trust their media and why mass media are thriving.

Beijing's approach to AI uses the same carrot-and-stick approach: billions for AI startups; ten million high school graduates this year passed an AI course; one of their first exascale computers was designed exclusively for AI processing.

The price is a mix of 'golden shares' in companies; Party Secretaries in their C-suites; access to their data; and the lead in writing legislation that attracts 66% support in Congress.

Xi has been known all his life as a strong finisher, and he chairs the committee to which the people who run programs like AI promotion report. Li Qiang, the new Prime Minister, will ride herd on it, since he turned Shanghai into a booming technology hotbed.

Now, add all those factors together and you can see why AI guru Kai-Fu Lee says China is already ahead of us in exploring and exploiting AI, and that the gap widens daily.
