Segmentation faults: how machine learning trains us to appear insane to one another

The brainworms will come for us all

Imagine a world where the following things are true:

Big Tech platforms make money by micro-targeting ads to their users, so that ads that are more accurately and narrowly tailored are more valuable to platforms and advertisers than ads that are more general.

Following on the above, the more fine-grained segments you can slice your audience into, the better you can service the long tail of advertisers. So there’s an ad-driven market for audience segmentation that the platforms want to meet.

Advertising works, and in general, a human’s behaviors, preferences, values, and even basic tenets of their worldview can be modified by the media they’re exposed to.

Our society is getting more fragmented and polarized, as existing groups splinter apart online and new, often smaller groups and clusters form around different ideas, claims, worldviews, and identity characteristics.

I understand there are legitimate objections to each point above, but just go with it for a moment. Imagine that the above accurately describes our present social reality in 2021.

If I’m right, then it seems quite possible that the first two items in the list above (i.e., tech platforms have created a lucrative advertising market for an atomized, segmented audience) are leading directly to the last item in the list (i.e., to our increasingly atomized, segmented public).

To make my case, I’d like to throw out two claims for people to think about over the course of this post:

The old broadcast media model created a well-documented market for mass consensus, so consensus is what we more or less had for as long as that model reigned; but I think the new social media model has created a market for division and splintering, with the result that division and splintering is now what we have more of.

Just like the physical technologies of broadcast media — centralized one-to-many distribution via local printing/shipping cartels and limited FCC licenses — were linked to the way that model constructed a market for a baseline level of social cohesion and public consensus on certain fundamentals, the new technologies of social media — many-to-many advertiser <=> audience segment relationships (i.e., the long tail) plus large machine learning models for audience classification and behavior modification — are linked to the way social media creates a market for social fragmentation.

Audience segmentation

If you buy ads on Facebook, Google, Twitter, or some other large or small tech platform, you get a set of tools that let you do audience segmentation — you can buy ads targeted at women in the market for a new car, or men who are fans of a particular TV show, or retirees who live the RV life, etc.

Here’s a screen-cap from a Salesforce doc with a great visual that illustrates how this works:

(I left some of the document’s text in at the bottom of this screen-cap, because it’s quite relevant for the rest of this post.)

From reading this document, and similar docs on audience segmentation, you get the impression that “segmentation” is purely a cataloging and sorting act — sort of like a botanist going out onto a plot of undeveloped land and cataloging and categorizing the different kinds of plants she finds there. And when an advertiser is using a tool to do this kind of segmentation, that impression is accurate — it is indeed just categorizing things that are already there.

But the question I’d like to raise is, did the platform that’s offering those segments for sale play an active role in actually creating the segments that it’s offering?

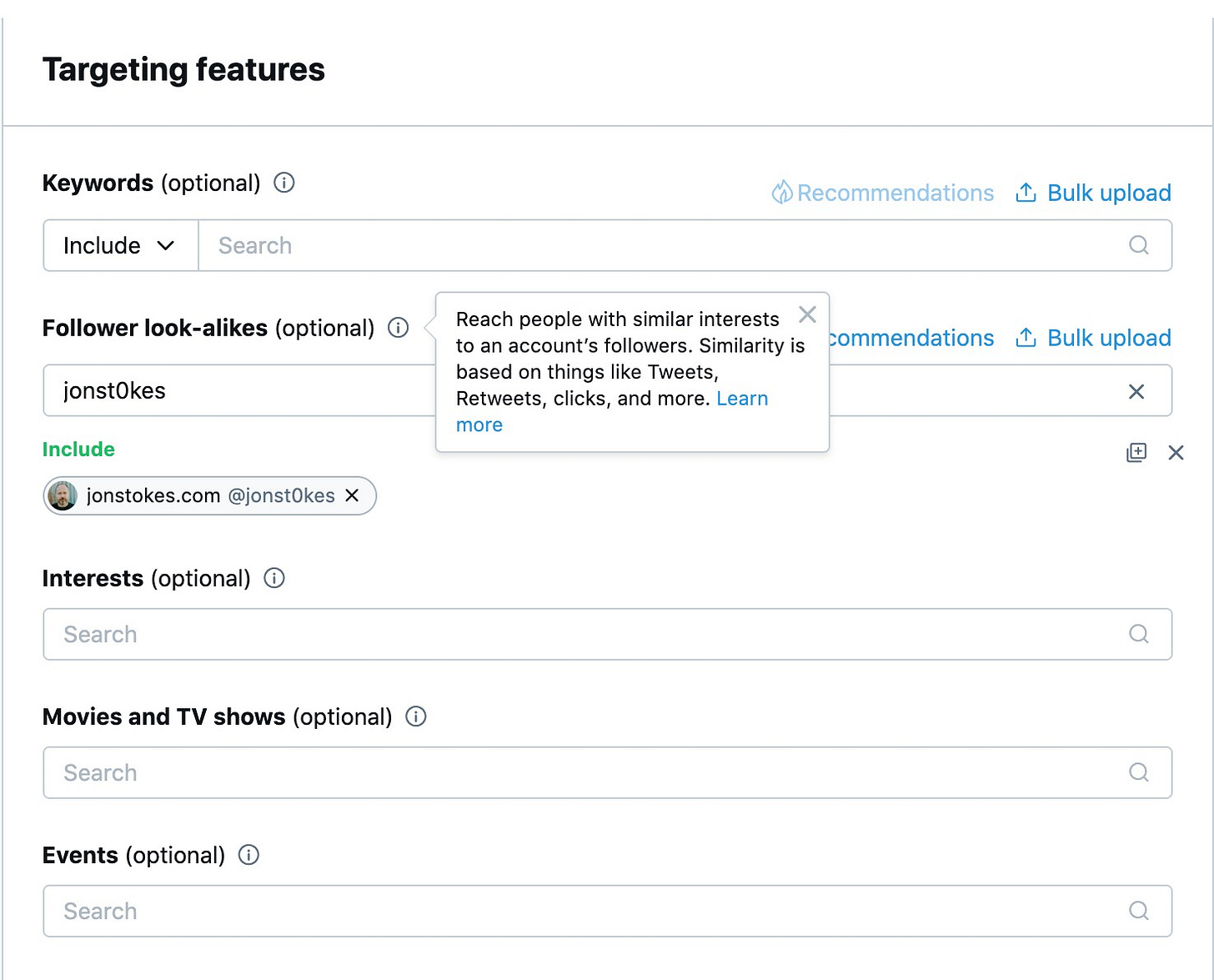

Take a look at this ad campaign creation screen from Twitter:

This tool lets me, an advertiser, microtarget ads by bundling together sets of attributes along predefined axes. I can also give Twitter an account handle — my own, for instance — and tell it to target people who are similar to my own followers.
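Mechanically, the two targeting modes on that screen can be sketched roughly as follows. This is a toy illustration, not Twitter's implementation: the `User` record, the attribute axes, the interest vectors, and the centroid-plus-cosine-similarity approach to "people similar to followers of @handle" are all hypothetical assumptions chosen to make the idea concrete.

```python
from dataclasses import dataclass, field
import math


# Hypothetical data model; not Twitter's actual schema or targeting logic.
@dataclass
class User:
    handle: str
    attributes: dict          # predefined targeting axes, e.g. {"gender": "female"}
    interests: list           # hypothetical interest-embedding vector
    follows: set = field(default_factory=set)


def attribute_segment(users, **criteria):
    """Keep users who match every requested attribute (an AND across axes)."""
    return [u for u in users
            if all(u.attributes.get(axis) == value for axis, value in criteria.items())]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def lookalike_segment(users, seed_handle, threshold=0.9):
    """Assumed lookalike rule: non-followers whose interests point the same way
    as the average interests of the seed account's followers."""
    seeds = [u for u in users if seed_handle in u.follows]
    if not seeds:
        return []
    dim = len(seeds[0].interests)
    # Centroid of the seed audience in interest space
    centroid = [sum(u.interests[i] for u in seeds) / len(seeds) for i in range(dim)]
    return [u for u in users
            if seed_handle not in u.follows
            and cosine(u.interests, centroid) >= threshold]


users = [
    User("a", {"gender": "female", "in_market": "car"}, [1.0, 0.0], {"jonst0kes"}),
    User("b", {"gender": "male", "in_market": "car"}, [0.9, 0.1], {"jonst0kes"}),
    User("c", {"gender": "female", "in_market": "car"}, [0.95, 0.05]),
    User("d", {"gender": "female", "in_market": "rv"}, [0.0, 1.0]),
]

print([u.handle for u in attribute_segment(users, gender="female", in_market="car")])
# ['a', 'c']
print([u.handle for u in lookalike_segment(users, "jonst0kes")])
# ['c']
```

The lookalike segment is defined entirely by who already follows the seed account, so every new follower shifts the centroid and redraws the boundary of the segment on offer.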

Think about what it means that “followers of jonst0kes” is an audience segment. I am the person behind the jonst0kes handle, and therefore I’m the person who has created and defined that segment. I’m also constantly in a process of constructing that audience segment by interacting with the Twitter algorithm via tweets, retweets, replies, and favorites.

In fact, when I tweet out this very post you’re reading — a post that aims to influence how you think about media and how you understand and act in the world — I will literally be cooperating with Twitter’s algorithm to build the audience segment being offered for sale in the screen-cap above.

The bottom line: Via a combination of ML-enabled ad microtargeting, ML-automated feed curation, and gamified signaling (via my own Twitter activity), Twitter and I are training you to be a part of this audience segment that did not exist before I created it in the world.

Anyone can now invent a type of guy

The idea that advertising actively constructs an audience for a product is not at all new. In fact, the truism that great ads create identity clusters around brands and products (think “Ford guys” vs. “Chevy guys,” or “Mac person” vs. “PC person”) is one of the first things people learn when they stop being naive about ads and start to take them seriously.

The fact that effective ads can create market segments in the public where they did not exist before was, of course, true back in the old days of one-to-many broadcast media. But modern social media advertising introduces two new factors that fundamentally change the game:

Many-to-many advertiser/audience relationships, where one large platform can serve a very long tail of tiny advertisers by connecting them with a near-infinite supply of tiny audiences.

Machine learning on the advertiser-facing side that makes micro-targeting possible, and machine learning on the user-facing side that lets platforms actively construct tiny little “Apple guy vs. PC guy” micro-segments in the user base for sale to the advertisers.

Machine learning is the key piece that makes the first factor (many-to-many advertiser/audience relationships) possible on one centralized platform. Without ML, a platform couldn’t offer advertisers fine-grained analytics on a user base of hundreds of millions — segmentation would be a lot broader and therefore less valuable.
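A rough back-of-the-envelope calculation shows why combining targeting axes yields a near-infinite supply of tiny audiences. Assume, purely for illustration, $A$ independent attribute axes where axis $i$ has $k_i$ possible values, and a user base of $U$ people spread evenly across the resulting cells (all numbers below are hypothetical):

```latex
% Illustrative assumptions only: A axes, k_i values per axis, U users, uniform spread
N_{\text{seg}} = \prod_{i=1}^{A} k_i,
\qquad
\bar{n} = \frac{U}{N_{\text{seg}}}

A = 5,\; k_i = 10,\; U = 3 \times 10^{8}
\;\Rightarrow\;
N_{\text{seg}} = 10^{5},\quad \bar{n} = 3{,}000
```

Real audiences are not spread evenly, so many cells would be much smaller or empty; the point is only that each added axis multiplies the number of segments a platform can offer.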

But here’s the key part that’s usually missed: ML is also used in the other direction to actively divide up the user base into monetizable silos. So not only are the users training the curation algorithm, but the curation algorithm is also training the users — and I think it’s doing so to the end of segmenting them.

In her now-classic NYT op-ed on YouTube’s radicalization engine and her related TED talk, Zeynep Tufekci details how the mix of social media, machine learning, and targeted ads does three things:

1. Target users based on shockingly detailed and accurate inferences about their hidden preferences and mental states.

2. Motivate users to act in the world, e.g. going to vote, or buying a product.

3. Show users ever more extreme forms of content in order to keep them engaged and on the platform.

This is all great stuff, but to my knowledge, she never quite puts these pieces together to argue that the radicalization she identifies in #3 above uses the motivating and attitude-shaping power described in #2 to create the audience segments sold to advertisers in #1. (I actually would not be surprised if she has indeed put these pieces together in this way, but I just haven’t seen it. So hopefully, someone will correct me if I’ve missed it.)

In her TED talk, Zeynep tells the story of watching Trump videos on YouTube and being shown progressively more hardcore white supremacist content by the suggestion algorithm, and she also talks about a ProPublica investigation into how some platforms will sell white supremacist audience segments to advertisers.

What I’d add to this argument is that we should close the loop by considering how the radicalization funnel creates the white supremacists (or whatever other niche online subculture) that are then offered for sale to advertisers.

It’s this latter synthesis — that the radicalization funnel is an input into the audience segmentation, and indeed that the platform actively segments the audience — that I’m trying to think through in this post.
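The escalation dynamic in Tufekci’s point #3, which drives the radicalization funnel, can be illustrated with a toy greedy recommender. The engagement model here is a hypothetical assumption for illustration, not a claim about how YouTube or any other platform actually scores content: users are assumed to respond best to content one notch beyond what they are used to, and their tolerance then shifts toward whatever they were served.

```python
def predicted_engagement(tolerance, intensity, novelty=1):
    """Hypothetical engagement model: a user responds best to content
    one notch more intense than what they are currently used to."""
    return -abs(intensity - (tolerance + novelty))


def recommend(tolerance, catalog):
    """Greedy recommender: serve whichever item maximizes predicted engagement."""
    return max(catalog, key=lambda item: predicted_engagement(tolerance, item))


def simulate(start_tolerance, catalog, steps, adapt=1.0):
    """Serve `steps` recommendations; after each one, the user's tolerance moves a
    fraction `adapt` of the way toward the intensity of what they just watched
    (an assumed adaptation rule)."""
    tolerance = start_tolerance
    served = []
    for _ in range(steps):
        item = recommend(tolerance, catalog)
        served.append(item)
        tolerance += adapt * (item - tolerance)
    return served


catalog = range(11)  # content intensity levels 0 (mild) .. 10 (most extreme)
print(simulate(0, catalog, 12))
# [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 10, 10]
```

No single recommendation is a big jump, yet the greedy choice ratchets the user to the extreme end of the catalog and keeps them there.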

Machine learning and the two-way feedback loop

The core insight I’m leaning on here in order to make my claim that Big Tech uses ML to produce the very audience segments it sells to advertisers is one I’ve drawn directly from the AI ethics folks.

Going all the way back to Cathy O’Neil’s landmark exposé of how financial models don’t just “model” racial divisions and inequality but actively reproduce them out in the world, a common theme in AI ethics discourse is that there exists a closed feedback loop between ML models and the world that these models are deployed into.

The diagram below is my attempt to illustrate this two-way feedback loop, where an ML model is modifying the world it’s interacting with even as it’s being trained by that same world.

A model that’s trained on a particular dataset will pick up that dataset’s categories and structure, and when it’s deployed at scale by a powerful entity — a bank, judicial system, hiring platform, etc. — will tend to perpetuate in the real world the same categories and structures it learned on that initial training run.

Social media is democratizing this ability to use ML to spread new divisions in society and grow new social formations. Anyone with a Twitter account and a credit card can now do a miniature version of what Chase Bank’s risk modeling and lending tools do, i.e., identify a category of people in the world, and then deploy ML at scale to perpetuate and grow that category by inscribing it on fresh groups of people who are coming into contact with the system.
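Here is a deliberately tiny simulation of that two-way loop. Everything in it is a hypothetical assumption for illustration: opinions are single numbers on [-1, 1], the "model" is just a split at the population mean, and each user’s feed nudges them toward content slightly beyond their segment’s average. Even so, retraining on data the model itself has shaped pulls the two segments further apart every round, while tightening each segment around its own center.

```python
from statistics import mean


def train(opinions):
    """'Training' (toy assumption): learn a two-segment split at the population
    mean, plus the average opinion (centroid) of each segment."""
    cut = mean(opinions)
    left = [x for x in opinions if x < cut]
    right = [x for x in opinions if x >= cut]
    return cut, mean(left), mean(right)


def deploy(opinions, cut, c_left, c_right, push=0.1, rate=0.5):
    """'Deployment' (toy assumption): each user's feed shows content a little
    beyond their segment's centroid, and each user moves `rate` of the way toward it."""
    updated = []
    for x in opinions:
        target = c_left - push if x < cut else c_right + push
        target = max(-1.0, min(1.0, target))  # opinions live on [-1, 1]
        updated.append(x + rate * (target - x))
    return updated


def run(opinions, rounds):
    """Alternate train -> deploy, recording the gap between segment centroids."""
    gaps = []
    for _ in range(rounds):
        cut, c_left, c_right = train(opinions)
        gaps.append(c_right - c_left)
        opinions = deploy(opinions, cut, c_left, c_right)
    return opinions, gaps


final, gaps = run([-0.2, -0.1, 0.1, 0.2], rounds=5)
print([round(g, 3) for g in gaps])
# [0.3, 0.4, 0.5, 0.6, 0.7]
```

Nobody in this toy decides to become more partisan; under these assumed dynamics, the widening gap comes entirely from alternating the train and deploy steps.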

Wyrmism: a whimsical example

To return to my Twitter example above, imagine that the goal of my Twitter account was to create new adherents to my homebrew religion: Wyrmism. The basic tenets of Wyrmism (which is imaginary because I just made it up) are:

Dragons are awesome.

Fire-roasted goat is the best food.

Whenever we have disposable income, we buy WyrmCoin (which I have issued and own most of) and hodl it.

(There are a few other important tenets — some stuff about fleeing villagers and a prohibition against eating animals with scales — but the three above are the only ones important for our purposes.)

Now imagine that I’m constantly hustling Wyrmism (and WyrmCoin!) via this account, and I successfully attract followers and adherents so that I can sell them Wyrmist books, podcasts, events, and cruises.

Machine learning would help me create this audience segment of Wyrmists, and it would also help me identify them and market to them. Even if I couldn’t grow the Wyrmism community beyond, say, 1,000 people, I’d have used a credit card, a Twitter account, and machine learning deployed at scale to create a market segment that did not previously exist.

Other sellers could target that market segment, too. Anyone, in fact, could not just target it but join me in growing it.

Let’s say a mid-sized goat meat supplier comes across Wyrmism and realizes that this is a potentially huge market for its product. This company really wants Wyrmism to grow, because those nutters consume tons of fire-roasted goat. So the company goes all-in on Wyrmism with its modest ad budget, targeting Wyrmists on every social media platform.

Eventually, this injection of fresh money and energy grows Wyrmism’s ranks to 50,000 in the US alone. At that point, agribusiness giant Purina starts to notice that its goat feed sales are jumping. People are not only buying a lot of goat meat, but they’re buying and keeping their own goats. Purina has really deep pockets and smells a big opportunity.

Purina’s marketing team is savvy enough to do the one thing that will generate massive amounts of free publicity: they bid up WyrmCoin to the moon. Then Elon tweets about it, and we all spend a full week on Twitter where #WyrmCoin is the main trending topic on everyone’s feed.

Thanks to all the attention from the WyrmCoin bubble, Wyrmism is now so huge that even Tractor Supply is in on the action, along with Home Depot and a number of other brands. When National WyrmWeek comes around, they all change their social media logos to feature dragon wings...

This example is silly, but hopefully you get the idea. There were no Wyrmists before I invented them, and I couldn’t have grown that new audience segment into a real category in the world without a combination of machine learning, targeted advertising, and social media scale.

Now imagine this process being carried out millions of times a day, every day without stopping, and the kind of world it would produce. Then look out the window.

Anatomy of a brainworm

The market and ML-powered mechanics described above are a big reason why I think people are appearing to lose their minds on social media. This is the classic “filter bubble” argument, but I hope this piece has shed some light on how and why these bubbles form.

If I’m onto something with this post, and I think I am, then maybe “filter bubble” is the wrong term, and a better one is just “segment.” Someone who has been actively segmented by the platform, and who’s now in a segment that’s very far from your own, may well appear to you to be insane.

Again, this isn’t a thing they’ve done to themselves — they haven’t “self-radicalized.” Rather, they’ve been segmented by the feed in cooperation with the market.

Some of you may recognize the popular “brainworms” trope here, wherein the person appears to have had their nervous system hijacked and is no longer in control of themselves. That’s essentially what I’m describing.

As for the larger social ramifications of this brainworm pandemic, I can’t give a better description than this classic BJ Campbell post from 2019: Not Your Imagination — Society Is in Fact Going Insane, and I Can Prove It. So go read BJ, and then start thinking about how we’re going to fight this.

Thanks for the link. I've been chewing on this problem since at least early 2018 and I think there's simply no way to fight it, other than to destroy the internet. Best I can come up with is to develop and propagate a new religion with a "thou shalt not use smart phones" commandment in it somewhere.

And further, I cannot see how this problem won't get worse, because of the feedback loops you're describing. Even if machine learning were not applied to the problem, human learning does a bang-up job of creating it on its own. See: reddit moderation practices. It's not just the algorithms. At its root, this is just the ordinary mechanic of a culture interpreting its reality (as has been done for thousands of years) amplified and bifurcated and accelerated by a time factor of thousands. We've kind of always been doing this, but never this fast or this fractured.

As with much of my material, the solution as best as I can see is not to try and avoid Very Bad Things, it's to buy bullets and find some place to hide out for when the thing pops. Print out all those The Prepared articles and put them in a hardcopy binder.

This post and another one you wrote about gamification: I really want you to be wrong. It's a pretty bleak vision. I hope that Cory Doctorow is right and that ad tech is actually not very effective. On the one hand, there is evidence that many are using ad tech, which makes one think it must be valuable; on the other hand, Doctorow does seem to list some case studies that show it is not. I'm hoping he is right.

https://pluralistic.net/2021/01/04/how-to-truth/#adfraud

https://onezero.medium.com/how-to-destroy-surveillance-capitalism-8135e6744d59