The Story So Far: Generative AI Arrived Too Early

Humanity is struggling with sudden-onset AI creativity syndrome.

With this post, I’m trying a new thing where I do narrative-style updates on developments I’m tracking in the generative AI scene, with links and emojis sprinkled in. I’m tentatively calling it “The Story So Far,” but we’ll see if that title sticks or if the post idea even sticks. It’ll all depend on the number of subscriptions and shares this installment generates.

So if you liked this and you’re not already a subscriber, then you can vote to see more of it by subscribing. And if you’re already getting this newsletter (either paid or free), then you can vote to see more by clicking the “Share” button at the bottom of this post and sharing it with friends. I’ll take new free and paid signups, and new shares, as an indicator that I should keep at this.

😕 People are having misgivings about the way AI is shaping up.

They’re having misgivings because the order of developments isn’t what they were led to expect by decades of science fiction. AI was supposed to come for the low-wage and the low-skilled jobs (fry cooks, janitors, shelf restockers) and then move up to more specialized jobs (truck drivers, delivery people, launderers, household help). The Roomba was supposed to be a little glimpse of the future of artificial intelligence — it just follows you around and quietly cleans up after you, and maybe it gets smarter over the years and moves up to fetching drinks and other light household chores.

But this is not what’s happening, is it?

Generative AI has been such a shock to everyone because it’s backward. Nobody, not even the AI researchers, saw this coming, so we’re all scrambling to figure out how to think about it. There aren’t really any classic stories or movies we can think with. It’s all out of order. Since Mary Shelley’s Frankenstein or Čapek’s R.U.R., we’ve been prepped for our creations to start out lowly in station and limited in intellect before, over the space of a few hundred years, presenting us with the kinds of dilemmas Commander Data posed to humanity in ST:TNG.

So people are struggling with the way the machines came for the wrong work, first.

💀 They’re wringing their hands about the possible death of creativity.

💡 They’re musing about the relationship between creativity and truth.

👨‍⚖️ They’re trying to sue over it.

✊ They’re organizing to fight the bots.

✋ They’re trying to debunk the hype.

People are trying in all these ways to come to an understanding of what this sudden, shocking development means for how we all relate to one another, and how we’ll make money, or navigate dating apps.

On that last note, here’s a video from a guy who’s worried about the combination of machine learning and dating apps, and the possibility of using ML to do a kind of hyper-potent catfishing where you slide into a potential hook-up’s DMs with the words of a bot that has been trained on billions of dating interactions and will know exactly what to say to this new match — AI-powered game:

Of course, he advertises this video as “how to get rich with AI” so people will watch it. I feel this, though. The posts I do about ethics, the law, or philosophy do way worse in terms of driving signups than anything that might give readers an insight into how to make things with AI or into what all this means for their earning potential.

👨‍⚖️ The lawsuits

The lawsuits over generative AI have started, so here’s a quick list of them:

The first two are run out of the same law office by the same WSJ-headshot-having guy, Matthew Butterick. The two sites are both very slick, and the whole operation suggests that they’re doing a kind of franchise thing where there’s a formula and we’ll see a lot more of these coming.

This makes sense, since the models behind both Stable Diffusion and Copilot are the same fundamental technology, so arguments developed against one will fit the other. This multi-suit strategy, then, is about getting maximum ROI for the lawyers and doing maximum damage to generative AI across the board.

The third lawsuit, Getty Images, can be thought of as a kind of sequel to Getty’s famous suit against Google over scraping. As I said in my previous post, I think the scraping stuff has been mostly sorted — we all panicked about it very early on, but at this point, it’s pretty well settled into something everyone can live with.

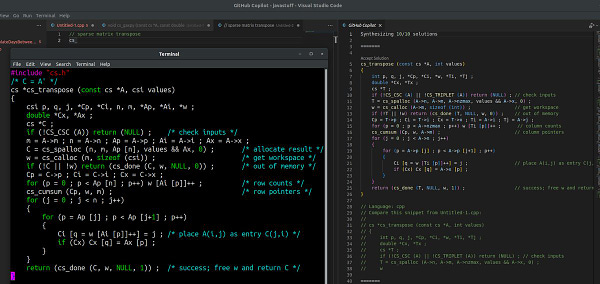

As for resources and further reading, here’s a counterpoint to the GitHub Copilot suit. I have some issues with some of the claims in here — in particular, I think this line of attack is going absolutely nowhere:

…the argument that the outputs of GitHub Copilot are derivative works of the training data is based on the assumption that a machine can produce works. This assumption is wrong and counterproductive. Copyright law has only ever applied to intellectual creations – where there is no creator, there is no work. This means that machine-generated code like that of GitHub Copilot is not a work under copyright law at all, so it is not a derivative work either. The output of a machine simply does not qualify for copyright protection – it is in the public domain. That is good news for the open movement and not something that needs fixing.

None of the arguments I’ve seen in either of the Butterick lawsuits rest on the idea that the model itself is the originator of the derivative work. I think Butterick would counter that the user of the model is the originator of the derivative work — that if you use Stable Diffusion to create a derivative work, then you’re the copyright holder of that derivative work, and you’re the one who owes royalties to copyright holders whose work your new piece is derived from.

But the author’s rants against the way the erstwhile “copyfighters” who’ve been pushing the GPL are now fighting Copilot because it’s reproducing their code sans GPL are spot-on. It was a shock to me when I saw, via some Verge reporting, that Copilot was being opposed on GPL grounds. That seems to be in clear violation of the spirit of copyleft, if not the letter.

👉 A tech lawyer on Twitter has a good, brief thread that makes a number of similar points to those I made in my two recent pieces on the Stability AI suit, but in a concise form that’s more shareable. Plus, he’s a lawyer, so bonus points for credibility:

🙄 As a counterpoint, here are two threads from an AI researcher who thinks Stability AI is in bad shape on this suit.

I don’t care about the second thread because I think “consent” is a dumb and flimsy framework on which to build a morality. I think consent is important in some narrow situations for some specific things, but appealing to it like some sacred universal principle that can form the bedrock of all human relations is stupid and I am not here for it. Maybe I’ll write more on this “consent” issue in some other venue.

To the narrow point of “consent” in this situation, I don’t think Emad and Stability AI should’ve had to get anyone’s “consent” to train an AI on work that’s displayed in public for the public to see (and to thereby train their own gray matter on). The appeal to “consent” in this situation strikes my ears the same way some stuff about the soul and the divine spark would strike the ears of an atheist — you’re just preaching to some choir that I’m not in.

Or, to put it in a way that’s more directly relevant to the guy’s argument, I care as little for his “consent” framework for analyzing the fairness of this as he does for Emad’s “centralization vs decentralization” framework for analyzing it. In fact, I’ll take Emad’s approach over his all day every day. He thinks the decentralizing frame is libertarian “virtue signaling”? Fine, but I think his “consent” frame is woke virtue signaling.

I found the above two threads via a post on another Substack, which I in turn found via a comment from the author on my previous post. I don’t think much of his Substack post or of his comment — not because he disagrees with me, but because it doesn’t add anything to the discussion and I didn’t learn anything from it. I may try to respond to it, though, if I get some time.

Posted elsewhere

I published a piece in Reason on the Stability AI lawsuit, so go check it out:

I also did a very quick hit for RETURN called “New AIs will need new types of institutions.” What I published was actually the second, less interesting (to me) draft I did on this same topic. The first one had a whole bunch of stuff about monasteries, libraries, institutions, the humanities, and the history of textual scholarship, but it was all too much of a lift for my covid-weakened brain.

I’ll return to this set of issues many times this year, though, so more on that at a later date.

Some links and threads of note

💸 Microsoft may dump another $10 billion into OpenAI. I gave my own analysis of the Microsoft/OpenAI relationship in April 2021, and it still stands.

🤖 Yann LeCun and Jacob Browning take to NOEMA to ponder what AI might teach us about intelligence.

🤓 Tyler Cowen talks about AI.

Stunting AI development on copyright issues will provide an edge to cultures that are indifferent to copyright.